Share

AWS Lake Formation

How it Works

Amazon Web Services (AWS) Lake Formation function makes it possible to set up a safe and sound data lake in a matter of days with minimal effort and time spent. This repository stores all of your data both in its raw form and in a format that has been optimized for analysis. Both of these versions of your data can be accessed at any time. It is centralized, curated, and password protected. By breaking down data silos and mixing different types of analytics in a data lake, you can potentially get insights and make better business decisions. This can be accomplished by merging multiple forms of analytics. At this time, the process of setting up and administering data lakes involves a number of procedures that take up a significant amount of time. It requires loading data from a wide variety of sources, monitoring the data flows, configuring the partitions, enabling encryption and managing the keys, defining transformation jobs and monitoring their operation, organising the information in columnar format, deduplicating redundant data, and matching linked records, among other tasks. After data has been deposited into a data lake, you need to provide granular access to datasets and monitor users’ activity over time using a wide variety of analytics and machine learning (ML) tools and services.

Lake Formation is an incredibly powerful tool that streamlines the process of moving your data into an Amazon Simple Storage Service (S3) data lake. But it doesn’t stop there - it goes above and beyond to ensure your data is clean, classified, and secured with the help of advanced machine learning algorithms.

Once you have collected and catalogued your data from various databases and object storage, Lake Formation kicks into action. It leverages the power of machine learning to clean your data, removing any inconsistencies, duplicates, or errors that may hinder your analysis. The machine learning algorithms employed by Lake Formation are designed to identify and eliminate these issues, providing you with high-quality, reliable data for your analytical needs.

But it doesn’t end with just cleaning your data. Lake Formation takes it a step further by classifying your data, organizing it into meaningful categories that make it easier to understand and work with. By utilizing machine learning, Lake Formation can automatically categorize your data based on its content, ensuring that you can easily access and analyze the information you need. And when it comes to securing your sensitive data, Lake Formation excels. It provides granular controls at the column, row, and cell levels, allowing you to define who can access specific data elements within your data lake. This level of control ensures that only authorized individuals have access to sensitive information, providing peace of mind and maintaining compliance with data privacy regulations.

Functionality

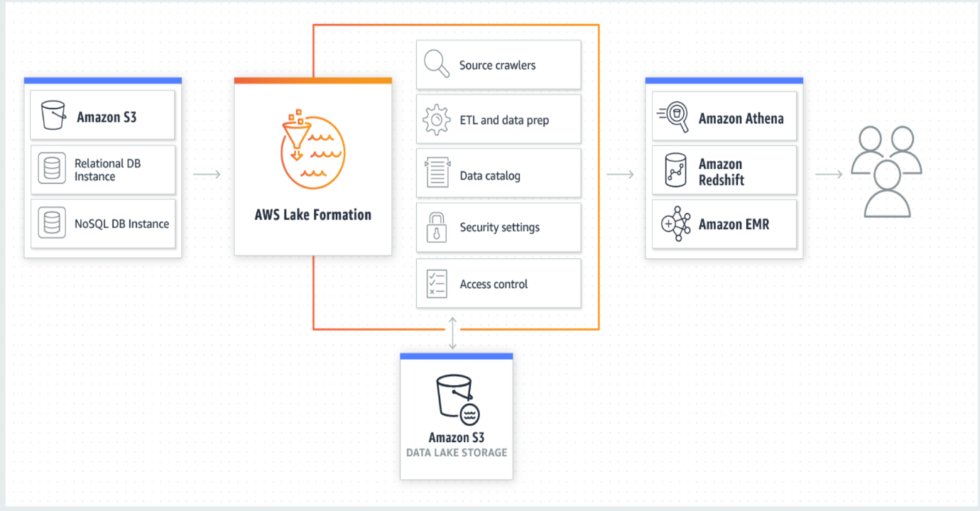

Lake Formation is a tool that provides assistance to you in the process of creating, securing, and managing your data lake. The first thing you need to do is locate any current data storage, whether it’s in S3 or a relational database or a NoSQL database, and then you need to move the data into your data lake. The following step is to crawl the data, then catalogue it, and finally get it ready for analysis. Give your users the option to pick from a number of different analytics providers so that they can have secure access to their data through a self-service portal that they control. Data can be accessed through them not just by the AWS services that are shown, but also by other AWS services and third-party applications. The management of all of the responsibilities shown in the orange box falls under the purview of Lake Formation, as does the responsibility of integrating those responsibilities with the data repositories and services shown in the blue box.

Create data lakes quickly: It is now much easier to move, store, categorize, and clean data if you use Data Lake Formation, which allows you to create data lakes in a much shorter amount of time than in the past. Lake Formation will automatically crawl all of your data sources and move the data into a new data lake that is hosted on Amazon S3. The Lake Formation service divides up the information stored in S3 into manageable bits and organizes it based on commonly searched for terms. In order to do analyses more quickly, the data are converted into formats such as Apache Parquet and ORC. Lake Formation also has the capability to deduplicate records and locate matching records, which are defined as two entries that relate to the same thing. This helps to improve the quality of the data collection as a whole.

Simplify the management of security: Lake Formation allows you to define and enforce table, column, row, and cell access controls for all users and services that access your data. Consistent policies are implemented across all AWS services like Redshift, Athena, AWS Glue, and EMR for Apache Spark. This eliminates the need to manually configure policies for security, storage, analytics, and machine learning across all AWS services. This saves time and ensures uniformity in enforcement and compliance across all of the services that utilize it.

Self-service data access: You can create a data catalogue that includes all datasets and the people who have access to them using Data Lake Formation. Increased productivity is achieved by helping your users find the most relevant data for their analysis. Security is constantly being enforced by Lake Formation to keep your data safe for analysis and research. They may now analyse many datasets in a single data lake using EMR for Apache Spark, Redshift, Athena, AWS Glue, and Amazon QuickSight. In addition, users can mix and match services without having to transmit data between silos.

Permission controls: In AWS Lake Formation, there are a few key roles that you can use to manage permissions effectively:

- Service-Linked Role

This role comes with built-in permissions, allowing it to call other AWS services on your behalf. It’s designed for specific AWS services to perform actions without requiring manual configuration. This simplifies the management of permissions and ensures necessary integrations function seamlessly. - User-Defined Role

When predefined roles don’t cover all your needs, you can create a custom, user-defined role. This role allows you to set specific permissions tailored to your unique requirements. It offers more flexibility, letting you grant additional permissions that the service-linked role doesn’t cover. - Data Catalog Permissions

You can directly manage permissions on the tables within your data catalog. This involves specifying, granting, and revoking access controls to ensure only authorized users can interact with sensitive data.

What does the AWS Lake Formation include

Import data from existing databases: When you provide the location of your current databases and your login credentials to AWS Lake Formation, the data is scanned. Once the data is loaded, the metadata is stored in a central catalogue. Lake Formation may import data from RDS or EC2 databases on Amazon’s Elastic Compute Cloud (EC2) (EC2). You have the option of loading data in bulk or piecemeal.

Integrate data with other sources: Java Database Connectivity can link Lake Formation to on-premises databases (JDBC). When you log into the Lake Formation console, you may select the data sources you want to import and your login credentials. ETL procedures can be built using AWS Glue to import data from databases other than those listed here.

Import data from different AWS services: Data from different S3 data sources can be imported into Lake Formation in a semi- or unstructured form in the same way. An Amazon S3 bucket inventory should be performed initially. By specifying an S3 path, Lake Formation can read the data and schema included within the data. AWS CloudTrail, AWS CloudFront, Detailed Billing Reports, and AWS Elastic Load Balancing data can be organized using the data lake Formation function (ELB). Custom processes can also import data into the data lake using Amazon Kinesis or Amazon DynamoDB.

Organize and label your data: When customers are looking for datasets, Lake Formation provides a library of technical metadata (such as schema definitions) that has been taken from your data sources. Lake Formation can crawl and read your data to obtain technical metadata (such as schema definitions). Custom labels can be applied to your data (table and column level) to denote elements like “important information” and “European sales data.” Lake Formation allows users to search for data utilizing text-based search over metadata, allowing them to find information quickly.

Data transformation: Transformations like rewriting date formats to guarantee uniformity are possible with the help of Lake Formation. To get your data ready for analysis, Amazon Data Lake Formation creates transformation templates and arranges the processes that will do so. AWS Glue transforms your data into columnar formats like Parquet and ORC for storage. When data is sorted into columns rather than rows, the amount of data that must be analyzed is reduced. Custom transformation jobs can be built for your business or project using AWS Glue and Spark.

Enhanced partitions: Lake Formation optimizes data partitions in Amazon S3 to improve performance and reduce costs. Many unprocessed raw data files may be loaded into partitions that are too small (requiring extra reads) or too large (requiring no more reads) (reading more data than needed). Using Lake Formation, your data can be sorted by size, time period, and/or other important variables. The most frequently used queries benefit from quick scanning and distributed parallel reads.

Enforce encryption: Lake Formation encrypts your data lake with Amazon S3’s encryption. AWS Key Management Service keys are used for server-side encryption in this solution (KMS). Using S3, you can utilize distinct accounts for the source and the destination regions to guard against malicious deletions of data in transit. By using these encryption capabilities, you can relax knowing that your data lake is safe and free to focus on other tasks.

Manage access controls: Lake Formation is a comprehensive solution that simplifies data access control within your data lake. Lake Formation allows you to easily manage and customize security policies for all AWS Identity and Access Management (IAM) users and roles. These policies ensure that the right level of data protection is applied consistently across your data lake.

One of its key features is tag-based access control, which allows you to manage and customize security policies based on tags assigned to your data catalog resources. This approach is particularly beneficial for handling a large number of data catalog resources efficiently. By tagging resources with specific metadata, you can create granular access policies that apply to groups of resources sharing the same tags. This ensures that access permissions are consistently enforced, making it easier to scale your data protection measures as your data lake grows.

AWS Lake Formation is a comprehensive solution designed to streamline the creation and management of data lakes, thus tackling the significant challenges associated with big data environments. By centralizing data access control, Lake Formation provides a unified approach to managing security across different components of your data lake. This means you can define and enforce security policies that apply to various services, such as Apache Spark, Amazon Redshift Spectrum, and AWS Glue ETL.

One of Lake Formation’s pivotal elements is its ability to orchestrate and simplify the integration of various AWS services, such as IAM, S3, SQS, and SNS, into a single, cohesive service. This orchestration is facilitated by a set of preconfigured templates that can be customized to meet specific organizational needs, significantly reducing the time and effort required to configure these services manually.

The integration with IAM enhances seamless authentication and authorization of users and roles within your data lake. As users or roles are authenticated through IAM, Lake Formation automatically maps them to the appropriate data protection policies stored in the data catalog, ensuring that only authorized users have access to sensitive data under the relevant security controls.

Set up audit logging: CloudTrail, a cloud-based Amazon data store, provides detailed audit trails to monitor access and policy compliance. With Lake Formation, you can monitor data access across analytics and machine learning platforms. The CloudTrail APIs and console can access audit logs, just as they can access standard CloudTrail logs.

Lake Formation offers a wide range of APIs and a command line interface (CLI) to integrate its functionality into your custom applications seamlessly. These APIs allow developers to leverage Lake Formation’s capabilities, while the CLI provides a user-friendly way to interact with Lake Formation and perform various operations. Moreover, for those working with Java or C++ development environments, dedicated SDKs are available to easily integrate your data engines with Lake Formation.

Regulated tables: Amazon S3 tables can be accurately injected with ACID transactions. Users see the same data because Governed Table transactions resolve conflicts and mistakes automatically. When querying Governed Tables, you should use Amazon Redshift, Amazon Athena, and AWS Glue transactions.

Data meta-tagging for business: By adding a custom attribute to table properties, you can identify data owners like data stewards and business units. Adding commercial information to the technical metadata can help you understand how your data is used. Amazon data lake Formation security and access controls allow you to set appropriate use cases and data sensitivity levels.

Allow self-service: Lake Formation enables self-service data lake access for several analytics use cases. Access permissions can be granted or denied to tables defined in the central data catalog, which is used by several accounts, organizations, and services.

Find data for analysis:

Lake Formation streamlines the management of your data lake and enhances the ease with which you can discover and integrate data. Initially, Lake Formation automatically identifies all relevant AWS data sources your AWS IAM policies permit access to. This includes thoroughly scanning resources such as Amazon S3, Amazon RDS, and AWS CloudTrail to determine eligible data for your data lake. Importantly, data is only moved or made accessible to analytic services with your explicit permission, ensuring a secure data handling environment.

Once the data is identified and ingested into your data lake using tools like AWS Glue, which supports various sources, including S3 and Amazon DynamoDB, Lake Formation offers a powerful user interface. Users of Lake Formation can use text searches conducted online to search and filter datasets housed in a central data library. They can look for data by name, content, sensitivity, or any other custom label you set. This capability not only simplifies managing large volumes of data but also enhances the accessibility and usability of your data assets.

Combine analytics to gain greater insight: Athena for SQL, Redshift for data warehousing, AWS Glue for data preparation, and EMR for Apache Spark-based big data processing and ML can provide your analytics users with immediate access to the data (Zeppelin notebooks). If you point these services to Amazon Data Lake Formation, you will effortlessly combine different analytical methodologies on the same data.

Pricing

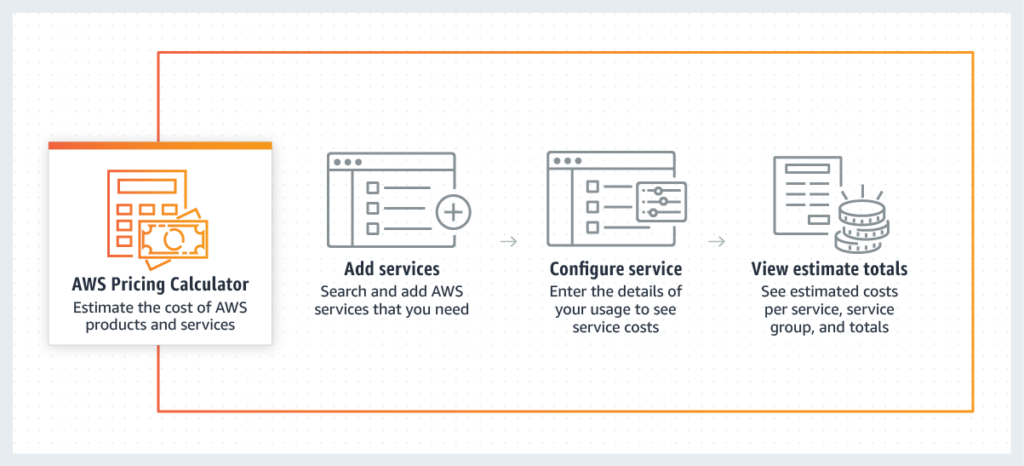

The AWS Lake Formation itself comes at no additional cost, functioning as a wrapper service that reduces complexity and expedites the setup of your data lake. However, while the service simplifies the management of data lakes, it is important to note that you will still be responsible for the costs associated with the AWS services utilized via Lake Formation. This includes, but is not limited to, services for data storage, processing, and transactions.

With Governed Tables, AWS Lake Formation simplifies the process of making accurate changes to many tables while ensuring a consistent view for all users. This feature is particularly useful when dealing with complex data transformations and updates. To manage concurrent transactions and enable rollback to previous versions of tables, AWS Lake Formation stores transaction metadata. While this functionality is essential, it’s important to note that transaction requests and storage of metadata may incur associated costs.

The Lake Formation Storage API plays a crucial role in analyzing the data stored in Amazon S3 and applying row and cell filters before delivering the findings to your applications. This data screening process ensures that only relevant and secure data is accessed. Importantly, the primary functionalities of AWS Lake Formation, including access controls based on databases, tables, columns, and tags, are provided at no extra cost.

Challenges of Management

Data lakes often fail for a multitude of reasons, which contributes to their decreasing effectiveness in business environments. A prevalent issue is the transformation of data lakes into data swamps. This occurs when data is indiscriminately accumulated without adequate management and organization strategies, leading to chaotic and unusable data repositories. Another significant factor is the sheer volume of data that data lakes must manage; users can find it daunting to navigate this extensive information, making it difficult to extract meaningful insights. Moreover, the inability to leverage the stored data effectively for timely and impactful analytics can render data lakes ineffective. This typically happens when data is not refreshed or utilized swiftly enough, causing delays in decision-making processes. These various challenges prompt organizations to reassess the viability and value of maintaining data lakes.

A data lake transforms into a “data swamp” when it is mismanaged or cluttered with excessive, irrelevant data. This degradation occurs when the structure and oversight of the data lake are neglected, allowing unorganized and redundant data to accumulate. In such a state, the data lake loses its effectiveness and efficiency, becoming a repository filled with “junk” that not only reduces its operational value but also leads to increased costs due to the difficulties in managing and extracting useful information. This cluttered environment greatly hinders the decision-making processes, turning what was once a resourceful data lake into a costly and inefficient “data swamp.”

Can I use third-party business intelligence tools with Lake Formation?

Yes, it is possible to use third-party business intelligence (BI) tools with Lake Formation. AWS provides the flexibility to connect to your data sources stored in services like Athena or Redshift through these tools.

By using popular third-party BI applications such as Tableau and Looker, you can connect to your AWS data sources effortlessly. The data catalog underlying Lake Formation takes care of managing data access. Therefore, regardless of the BI application you choose, you can have full confidence that data access is well-governed and controlled. This ensures that your organization can leverage the power of third-party BI tools seamlessly while maintaining data security and governance within the Lake Formation framework.

How does AWS Lake Formation use machine learning to clean data?

AWS Lake Formation leverages the power of machine learning (ML) to efficiently clean data. Through the use of ML algorithms, jobs can be created within Lake Formation that are specifically designed for tasks like record deduplication and link-matching. The process is made incredibly simple, as users only need to select their data source, choose the desired transformation, and provide the necessary training data for the ML algorithms to perform the required changes.

By utilizing ML Transforms, users can effortlessly train the algorithms according to their specific preferences. Once the ML Transforms are trained to a satisfactory level, they can be seamlessly integrated into regular data movement workflows without the need for deep ML expertise.

The overall aim of AWS Lake Formation’s ML capabilities is to provide a streamlined and user-friendly experience for cleaning and enhancing data. It empowers users to effectively and efficiently manage data quality, ensuring the accuracy and consistency of their datasets without requiring extensive ML knowledge.

What is the architecture of an AWS data lake?

The architecture of an AWS data lake involves several components that enable the storage, processing, and analysis of large amounts of unstructured data. The primary storage component is Amazon S3, which allows businesses to store datasets in their original form without prior structuring. This makes it ideal for organizations dealing with highly voluminous or frequently changing datasets.

To enable on-the-fly adjustments and analyses, the AWS Glue and Amazon Athena services are utilized. These tools facilitate the extraction, transformation, and loading (ETL) of data from the data lake. Additionally, they crawl the data to identify its structure, extract its value, and add metadata for better organization and analysis.

To provide governance and enable contextualization of datasets, user-defined tags are stored in Amazon DynamoDB. These tags allow the application of governance policies and enable users to browse datasets based on metadata.

A key aspect of the AWS data lake architecture is the creation of a pre-integrated data lake with SAML providers such as Okta and Active Directory. This integration ensures secure access to the data lake by leveraging existing user authentication systems.

The architecture consists of three major components:

1. Landing zone: This component is responsible for receiving raw data from various sources, both internal and external to the company. No data transformation or modeling takes place at this stage; it simply stores the raw data in the data lake.

2. Curation zone: In this component, ETL processes are performed to transform and model the data. The data is crawled to determine its structure and value, and metadata is added to enhance organization and searchability. Techniques such as modeling are used to improve the quality and usability of the data.

3. Production zone: This component contains the processed data that is ready to be utilized by analysts, data scientists, or business applications. The data is now in a refined state, suitable for direct analysis or consumption by downstream applications.

Overall, the architecture of an AWS data lake enables the storage, processing, and analysis of unstructured data by leveraging components like Amazon S3, AWS Glue, Amazon Athena, DynamoDB, and secure integration with SAML providers. The three major components, landing zone, curation zone, and production zone, provide a structured approach to managing and making use of data within the data lake.

What is a data lake and why do we need it?

A data lake is a centralized repository that allows for the storage of vast amounts of data, regardless of its structure or format. It serves as a hub for all types of data, whether it is structured data from databases or unstructured data from various sources such as social media, IoT devices, or logs.

The main purpose of a data lake is to provide a scalable and flexible solution for storing and analyzing data. Unlike traditional data warehouses, data lakes accommodate data in its raw form without the need for preprocessing or schema design beforehand. This raw data, often referred to as “big data,” consists of valuable information that can potentially drive insights, inform decision-making, and uncover new business opportunities.

One key advantage of a data lake is its ability to capture and retain a vast amount of data. This allows organizations to store and preserve data that may not have an immediate purpose, but could be valuable in the future. By providing a single location for storing all data, a data lake simplifies data management and reduces the need for data silos or multiple data storage systems.

Furthermore, a data lake enables organizations to perform advanced analytics and exploratory data analysis on the collected data. Teams can use various tools and techniques to process and extract actionable insights, which can contribute to research and development efforts, improve decision-making processes, and drive corporate growth. By empowering teams to test and analyze their ideas within the data lake, organizations can foster innovation and uncover new avenues for product development.

Another significant benefit of a data lake is its capability to consolidate and integrate diverse data sources. By merging data from multiple channels, such as customer purchase history, social media interactions, and other touchpoints, organizations gain a comprehensive understanding of their customers. This holistic view enables businesses to personalize customer experiences, optimize marketing campaigns, and enhance overall customer satisfaction.

In conclusion, a data lake serves as a central repository for all types of data, providing organizations with the ability to store, process, and analyze vast amounts of information. Its flexibility, scalability, and capacity to accommodate both structured and unstructured data make it a crucial tool for uncovering insights, driving innovation, and improving decision-making processes. By leveraging the power of a data lake, businesses can take advantage of their data assets, improve productivity, and fuel corporate growth.

Text AWS to (415) 890-6431

Text us and join the 700+ developers that have chosen to opt-in to receive the latest AWS insights directly to their phone. Don’t worry, we’ll only text you 1-2 times a month and won’t send you any promotional campaigns - just great content!