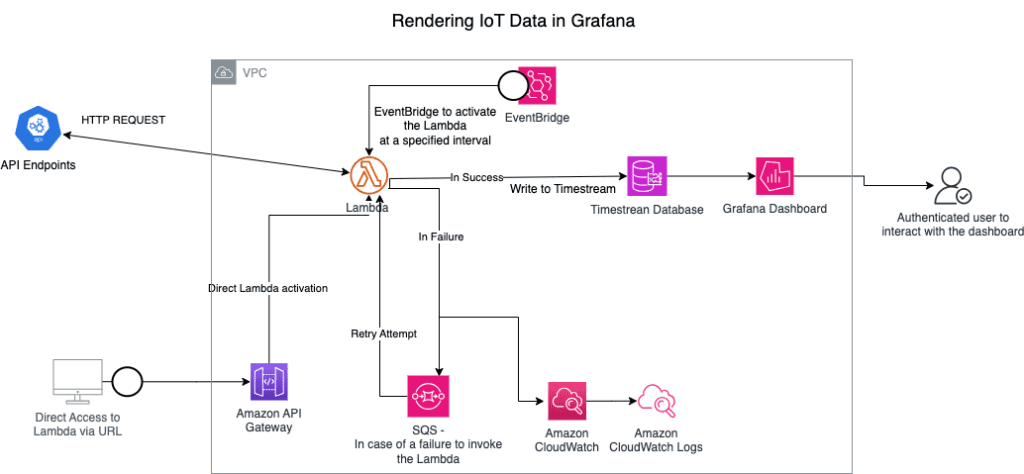

AllCode frequently receives requests from clients to visualize IoT telemetry data from their devices. Most clients initially want us to build a Proof of Concept (PoC) that polls a REST API they have already established, before they invest in AWS IoT Core. For these solutions, we recommend the following architectural blueprint, which uses AWS Lambda, Amazon API Gateway, Amazon CloudWatch, Amazon Timestream, and Amazon Managed Grafana. For signing in to the Grafana dashboard, we'll throw in single sign-on (SSO) through an Amazon Cognito user pool to boot.

In this blueprint, we leverage the following technologies:

Grafana: We use Grafana to build a user-friendly dashboard that visualizes the data ingested from devices. The dashboard improves the user experience and gives users a practical tool for data analysis and decision-making. In this solution, we created X SQL queries to extract and shape the specific data shown on the Grafana dashboard. We also registered X dashboard variables to create filters for the graphs and tables included in the dashboard.
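To show how a panel query and a filter variable fit together, here is a minimal sketch that assembles a Timestream SQL query for a Grafana panel. It makes several assumptions:

- the target is a table of single-measure records;
- the Grafana Timestream data source expands a `$__timeFilter` macro;
- there is a hypothetical dashboard variable named `device`.

The database, table, measure, and dimension names are placeholders, not the queries built for this project.

```javascript
// Sketch: compose a Grafana panel query against Amazon Timestream.
// All names passed in are hypothetical placeholders.
function buildPanelQuery({ database, table, measureName, dimension, variable, bin = "5m" }) {
  // Grafana replaces ${variable:singlequote} with the selected values as a
  // quoted, comma-separated list (e.g. 'dev-1','dev-2'), so one query serves
  // every filter selection. It is built by concatenation so JavaScript does
  // not treat it as template-literal interpolation.
  const grafanaVariable = "${" + variable + ":singlequote}";
  return [
    `SELECT ${dimension}, bin(time, ${bin}) AS binned_time,`,
    "  avg(measure_value::double) AS avg_value",
    `FROM "${database}"."${table}"`,
    `WHERE measure_name = '${measureName}'`,
    `  AND ${dimension} IN (${grafanaVariable})`,
    // Assumed macro: the Grafana Timestream data source expands $__timeFilter
    // into a predicate on the dashboard's selected time range.
    "  AND $__timeFilter",
    `GROUP BY ${dimension}, bin(time, ${bin})`,
    "ORDER BY binned_time",
  ].join("\n");
}

console.log(
  buildPanelQuery({
    database: "iot_db",
    table: "telemetry",
    measureName: "temperature",
    dimension: "device_id",
    variable: "device",
  })
);
```

Because the filter lives in a dashboard variable rather than in the SQL text, the same panel query can serve every device selection.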

Amazon Timestream: We opt for a single central database to keep data management efficient. Because devices vary in complexity, the data is spread across X tables. Tables that store multi-measure records are used for devices that report many attributes per reading, since one record can hold several measures that share the same dimensions and timestamp. Conversely, single-measure records (one measure per row) are chosen for less complex devices, which streamlines data storage and retrieval.
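The difference between the two record shapes is easiest to see in the request body. This minimal sketch builds input objects in the shape accepted by `WriteRecordsCommand` in the AWS SDK for JavaScript v3 (`@aws-sdk/client-timestream-write`). It produces one multi-measure record that carries several attributes and one single-measure record. The device fields (`deviceId`, `temperature`, `humidity`, `battery`, `timestampMs`) and the database and table names are hypothetical.

```javascript
// Sketch: build Timestream WriteRecords inputs for both record shapes.
// Device field names and database/table names are hypothetical.

// Multi-measure record: several attributes of one reading share the same
// dimensions and timestamp, so they are stored together in one row.
function toMultiMeasureRecord(reading) {
  return {
    Dimensions: [{ Name: "device_id", Value: reading.deviceId }],
    MeasureName: "environment",
    MeasureValueType: "MULTI",
    MeasureValues: [
      { Name: "temperature", Value: String(reading.temperature), Type: "DOUBLE" },
      { Name: "humidity", Value: String(reading.humidity), Type: "DOUBLE" },
      { Name: "battery", Value: String(reading.battery), Type: "BIGINT" },
    ],
    // Timestream expects values and timestamps as strings.
    Time: String(reading.timestampMs),
    TimeUnit: "MILLISECONDS",
  };
}

// Single-measure record: one measure per row, suited to simpler devices.
function toSingleMeasureRecord(reading) {
  return {
    Dimensions: [{ Name: "device_id", Value: reading.deviceId }],
    MeasureName: "temperature",
    MeasureValue: String(reading.temperature),
    MeasureValueType: "DOUBLE",
    Time: String(reading.timestampMs),
    TimeUnit: "MILLISECONDS",
  };
}

const reading = { deviceId: "sensor-42", temperature: 21.5, humidity: 40.2, battery: 87, timestampMs: 1700000000000 };

// Either object could be passed to new WriteRecordsCommand(input).
const multiMeasureInput = {
  DatabaseName: "iot_db",
  TableName: "environment_readings",
  Records: [toMultiMeasureRecord(reading)],
};
const singleMeasureInput = {
  DatabaseName: "iot_db",
  TableName: "temperature_readings",
  Records: [toSingleMeasureRecord(reading)],
};
console.log(JSON.stringify(multiMeasureInput, null, 2));
console.log(JSON.stringify(singleMeasureInput, null, 2));
```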

Lambda Functions: We'd create two distinct Lambda functions. The first, the Authentication Function, establishes a secure connection with the external API using Axios as the HTTP client. Keeping authentication in its own function follows the security principle of isolating authentication logic. The second, the Data Ingestion Function, is dedicated to writing records to Timestream, which keeps a clear separation of concerns within the serverless architecture.
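One way to keep the authentication logic isolated is to inject its dependencies, so the same code runs with Axios in Lambda and with a fake client in tests. This is a minimal sketch under that assumption. The token endpoint, the credential field names (`clientId`, `clientSecret`), and the response field `accessToken` are hypothetical. Only the `axios.post(url, data)` call, which resolves to a response whose body is in `data`, reflects the real Axios API.

```javascript
// Sketch of the Authentication Function's core logic.
// Hypothetical: token URL, credential field names, and response shape.
// `http` is expected to be axios (or any object with a compatible post method);
// `getCredentials` would read the API credentials, e.g. from Secrets Manager.
function createAuthenticator({ http, getCredentials, tokenUrl }) {
  return async function authenticate() {
    const { clientId, clientSecret } = await getCredentials();
    // axios.post(url, data) resolves to a response object with the body in `data`.
    const response = await http.post(tokenUrl, { clientId, clientSecret });
    if (!response.data || !response.data.accessToken) {
      throw new Error("Authentication failed: no access token in response");
    }
    // Only the token leaves this function; the credentials stay isolated here.
    return { accessToken: response.data.accessToken };
  };
}

// In the deployed handler this might look like (assuming axios is installed):
//   const axios = require("axios");
//   const authenticate = createAuthenticator({ http: axios, getCredentials, tokenUrl: process.env.TOKEN_URL });
//   exports.handler = async () => authenticate();
```

The Data Ingestion Function then never needs to see the credentials. It only receives the token or the fetched telemetry.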

EventBridge: We integrate Amazon EventBridge to invoke the Lambda functions through programmatically scheduled tasks. This automates the workflow so that critical functions run at their specified times without manual intervention, which keeps the architecture efficient and event-driven.
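As an illustration of a programmatically scheduled task, this minimal sketch builds the input for EventBridge Scheduler's `CreateSchedule` API. In the AWS SDK for JavaScript v3, that input would go to `CreateScheduleCommand` from `@aws-sdk/client-scheduler`. The schedule name, ARNs, interval, and payload are hypothetical.

```javascript
// Sketch: input for EventBridge Scheduler's CreateSchedule API that invokes a
// Lambda function at a fixed rate. Names, ARNs, and payload are hypothetical.

// Uses the EventBridge rate-expression convention: a singular unit for a value
// of 1 and a plural unit otherwise, e.g. rate(1 hour) and rate(5 minutes).
function rateExpression(value, unit) {
  if (!Number.isInteger(value) || value < 1) {
    throw new Error("rate value must be a positive integer");
  }
  if (!["minute", "hour", "day"].includes(unit)) {
    throw new Error(`unsupported unit: ${unit}`);
  }
  return `rate(${value} ${value === 1 ? unit : unit + "s"})`;
}

function buildScheduleInput({ name, value, unit, functionArn, roleArn, payload }) {
  return {
    Name: name,
    ScheduleExpression: rateExpression(value, unit),
    // OFF: no flexible window, so the target is invoked at each scheduled time.
    FlexibleTimeWindow: { Mode: "OFF" },
    Target: {
      Arn: functionArn,
      // Role that EventBridge Scheduler assumes to invoke the target.
      RoleArn: roleArn,
      // The payload delivered to the Lambda function, as a JSON string.
      Input: JSON.stringify(payload),
    },
  };
}

const scheduleInput = buildScheduleInput({
  name: "poll-telemetry-api",
  value: 5,
  unit: "minute",
  functionArn: "arn:aws:lambda:us-east-1:123456789012:function:ingest",
  roleArn: "arn:aws:iam::123456789012:role/EventBridgeScheduler",
  payload: { source: "scheduler" },
});
// With the SDK: await schedulerClient.send(new CreateScheduleCommand(scheduleInput));
console.log(scheduleInput.ScheduleExpression);
```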

SQS: We use Amazon Simple Queue Service (SQS) to send messages between components and to retry Lambda executions automatically when they fail. This makes asynchronous communication between the different parts of the application robust and fault-tolerant.
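One common way to get automatic retries from SQS is to make the queue the Lambda function's event source and report partial batch failures. This minimal sketch assumes that setup, with `ReportBatchItemFailures` enabled on the event source mapping. Under that assumption, only the messages listed in `batchItemFailures` return to the queue for another attempt. `processMessage` is a hypothetical stand-in for the real ingestion logic.

```javascript
// Sketch: an SQS-triggered Lambda handler that reports partial batch failures.
// Assumes ReportBatchItemFailures is enabled on the event source mapping;
// processMessage is a hypothetical injected function.
function createSqsHandler(processMessage) {
  return async function handler(event) {
    const batchItemFailures = [];
    for (const record of event.Records) {
      try {
        await processMessage(JSON.parse(record.body));
      } catch (err) {
        console.error(`Message ${record.messageId} failed:`, err.message);
        // Listed messages become visible on the queue again and are retried;
        // messages not listed are treated as processed and deleted.
        batchItemFailures.push({ itemIdentifier: record.messageId });
      }
    }
    return { batchItemFailures };
  };
}
```

To stop a message from retrying forever, you can give the queue a redrive policy with a `maxReceiveCount`. Messages that keep failing then move to a dead-letter queue for inspection.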

CloudWatch: Amazon CloudWatch stores and retains the logs written by the Lambda functions, which we rely on for error debugging. CloudWatch also gives the application visibility into its performance and behavior, so issues can be identified and resolved quickly through detailed log analysis.
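Anything a Lambda function writes to standard output or standard error lands in its CloudWatch log group. Writing one JSON object per line makes those logs easier to analyze. This minimal sketch shows the idea. The field names (`level`, `deviceId`, `recordCount`) are hypothetical.

```javascript
// Sketch: structured (JSON) log lines for CloudWatch Logs.
// CloudWatch Logs Insights discovers fields in JSON log events automatically,
// so fields such as deviceId become filterable. Field names are hypothetical.
function formatLog(level, message, fields = {}) {
  return JSON.stringify({ timestamp: new Date().toISOString(), level, message, ...fields });
}

function logError(message, err, fields = {}) {
  // Include the error message and stack so failures can be traced from the log alone.
  console.error(formatLog("ERROR", message, { ...fields, error: err.message, stack: err.stack }));
}

console.log(formatLog("INFO", "Wrote records to Timestream", { deviceId: "sensor-42", recordCount: 12 }));
logError("Timestream write failed", new Error("example failure"), { deviceId: "sensor-42" });
```

In CloudWatch Logs Insights, the following query would then surface only the failures:

`fields @timestamp, message, deviceId | filter level = "ERROR" | sort @timestamp desc`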

IAM: We use AWS Identity and Access Management (IAM) to secure access to AWS resources. Throughout, we enforce the principle of least privilege, granting the Lambda functions only the permissions they need. We'd create a dedicated user with the LambdaBasicExecution role. We'd next create a role that includes policies for writing to Timestream (Write to Timestream) and for basic Lambda execution (BasicLambdaExecution), which allows the functions to write their logs to CloudWatch. We'd also add an EventBridgeScheduler role, equipped with the Access to Timestream policy, which EventBridge Scheduler uses when it runs the scheduled tasks.
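To make "least privilege" concrete, this minimal sketch generates an identity policy of the kind a Write to Timestream policy could contain. The region, account ID, database, and table names are hypothetical. Logging permissions are assumed to come separately, for example from the AWS managed `AWSLambdaBasicExecutionRole` policy.

```javascript
// Sketch: a least-privilege IAM policy for the Data Ingestion Function.
// Region, account ID, database, and table names are hypothetical.
function timestreamWritePolicy({ region, accountId, database, table }) {
  const tableArn = `arn:aws:timestream:${region}:${accountId}:database/${database}/table/${table}`;
  return {
    Version: "2012-10-17",
    Statement: [
      {
        // Writes are limited to the single table this function ingests into.
        Effect: "Allow",
        Action: ["timestream:WriteRecords"],
        Resource: tableArn,
      },
      {
        // The Timestream SDK discovers its endpoint before writing; this action
        // does not support resource-level restrictions, so it requires "*".
        Effect: "Allow",
        Action: ["timestream:DescribeEndpoints"],
        Resource: "*",
      },
    ],
  };
}

console.log(
  JSON.stringify(
    timestreamWritePolicy({ region: "us-east-1", accountId: "123456789012", database: "iot_db", table: "telemetry" }),
    null,
    2
  )
);
```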

AWS Secrets Manager: We use AWS Secrets Manager to safeguard sensitive information. Confidential data, such as API keys and other credentials, is stored securely and managed centrally instead of being embedded in code or configuration, which strengthens the overall security posture of the system.
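Because a Lambda execution environment is reused across warm invocations, credentials can be read once and cached in module scope rather than fetched on every call. This minimal sketch takes that approach, with a time-to-live so that a rotated secret is eventually picked up. `fetchSecretString` is a hypothetical injected function. In production it could wrap `GetSecretValueCommand` from `@aws-sdk/client-secrets-manager` and return the response's `SecretString`. The JSON secret layout (`apiKey`) and the 5-minute TTL are assumptions.

```javascript
// Sketch: cache a JSON secret per Lambda execution environment with a TTL.
// fetchSecretString is hypothetical; it should resolve to the raw SecretString.
function createSecretCache(fetchSecretString, ttlMs = 5 * 60 * 1000, now = Date.now) {
  let cached = null;
  let fetchedAt = 0;
  return async function getSecret() {
    if (cached === null || now() - fetchedAt > ttlMs) {
      // Parse the JSON secret (e.g. {"apiKey": "..."}) and record when it was read.
      cached = JSON.parse(await fetchSecretString());
      fetchedAt = now();
    }
    return cached;
  };
}

// Created once at module scope so warm invocations share the cache, e.g.:
//   const getSecret = createSecretCache(async () =>
//     (await smClient.send(new GetSecretValueCommand({ SecretId: process.env.SECRET_ID }))).SecretString);
```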

In terms of source code, the solution was written in Node.js. We used AWS CloudFormation templates to provision the AWS resources, including the VPC, subnets, and security groups, and we used the Serverless Framework CLI (`sls`) to develop the Lambda functions.
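The Serverless Framework also accepts a JavaScript configuration file (`serverless.js`) in place of `serverless.yml`. This minimal sketch declares the two functions in that form. Every value in it is hypothetical:

- the service name, runtime, and region;
- the handler paths and environment values;
- the queue ARN;
- the CloudFormation stack and output names.

The `${cf:stack.output}` strings are Serverless variables that read outputs from the separately deployed network stack.

```javascript
// Sketch of a serverless.js configuration (Serverless Framework v3) for the
// two Lambda functions. All names, ARNs, and stack outputs are hypothetical.
const serverlessConfig = {
  service: "iot-telemetry-poc",
  frameworkVersion: "3",
  provider: {
    name: "aws",
    runtime: "nodejs20.x",
    region: "us-east-1",
    environment: {
      TIMESTREAM_DATABASE: "iot_db",
      TIMESTREAM_TABLE: "telemetry",
    },
    // Network resources come from the CloudFormation stack's outputs.
    vpc: {
      securityGroupIds: ["${cf:iot-network.LambdaSecurityGroupId}"],
      subnetIds: ["${cf:iot-network.PrivateSubnetAId}", "${cf:iot-network.PrivateSubnetBId}"],
    },
  },
  functions: {
    authenticate: {
      handler: "src/authenticate.handler",
      timeout: 10,
    },
    ingest: {
      handler: "src/ingest.handler",
      timeout: 30,
      events: [
        {
          sqs: {
            arn: "arn:aws:sqs:us-east-1:123456789012:telemetry-queue",
            batchSize: 10,
            // Lets the handler report individual failed messages for retry.
            functionResponseType: "ReportBatchItemFailures",
          },
        },
      ],
    },
  },
};

module.exports = serverlessConfig;
```

With this file in the project root, `sls deploy` would package and deploy both functions. `sls invoke --function authenticate` would run one of them on demand.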

Each Lambda function is built around an asynchronous handler for its HTTP requests, so calls to the external API can be awaited without blocking. Lambda itself scales out by running additional instances when requests arrive concurrently. Alongside this, a dedicated service instance for Timestream encapsulates database access and adapts to varying workloads. The Write to Timestream function is a critical component, since it integrates the data into the Timestream database securely and reliably. Together, these choices follow serverless best practices for efficiency, scalability, and data integrity.
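A Write to Timestream function must respect the `WriteRecords` limit of 100 records per request. This minimal sketch splits records into batches and sends them through a single client. `client` is expected to behave like `TimestreamWriteClient` from `@aws-sdk/client-timestream-write`, which exposes a `send` method. `makeCommand` would be `(input) => new WriteRecordsCommand(input)`. Both are injected so the sketch runs without the SDK installed. The function and parameter names are hypothetical.

```javascript
// Sketch of a "Write to Timestream" helper with batching.
// WriteRecords accepts at most 100 records per request.
const MAX_RECORDS_PER_WRITE = 100;

function chunk(items, size) {
  const chunks = [];
  for (let i = 0; i < items.length; i += size) {
    chunks.push(items.slice(i, i + size));
  }
  return chunks;
}

async function writeToTimestream({ client, makeCommand, databaseName, tableName, records }) {
  let written = 0;
  for (const batch of chunk(records, MAX_RECORDS_PER_WRITE)) {
    // Batches are sent sequentially; a failed send rejects and surfaces to the
    // caller (and, with an SQS trigger, to the retry mechanism).
    await client.send(makeCommand({ DatabaseName: databaseName, TableName: tableName, Records: batch }));
    written += batch.length;
  }
  return written;
}

// In the deployed function, the client would be created once at module scope
// so warm invocations reuse it, e.g.:
//   const { TimestreamWriteClient, WriteRecordsCommand } = require("@aws-sdk/client-timestream-write");
//   const client = new TimestreamWriteClient({});
//   await writeToTimestream({ client, makeCommand: (input) => new WriteRecordsCommand(input), ... });
```

Note that Timestream can also reject individual records within a request, for example duplicates or timestamps outside the memory store retention. It reports this with a `RejectedRecordsException` that identifies the rejected records, so a production version would likely log those entries rather than retry the whole batch.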