AWS Load Balancer

How it Works

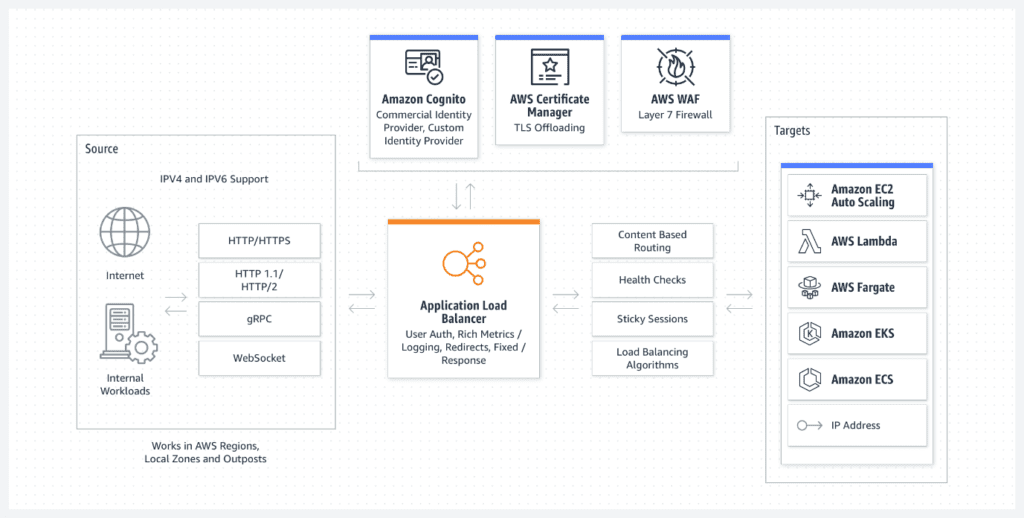

Application Load Balancer:

An Application Load Balancer (ALB) operates at the request level (Layer 7), routing traffic to targets (EC2 instances, containers, IP addresses, and Lambda functions) based on the content of the request. It is well suited to advanced load balancing of HTTP and HTTPS traffic, which benefits architectures such as microservices and container-based applications. It also helps keep applications secure by ensuring that current SSL/TLS ciphers and protocols are used.

Features:

• Layer 7 balancing

ALB load balances HTTP/HTTPS traffic across Amazon EC2 instances, microservices, and containers, with Layer 7 features such as support for X-Forwarded-For headers.
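As a rough illustration of how a back-end might read the X-Forwarded-For header, the sketch below assumes the load balancer appends the address of the connecting client to the end of the header, so the right-most entry is the one the load balancer itself observed. The function name `client_ip_seen_by_lb` is hypothetical and the example ignores optional port suffixes.

```python
from ipaddress import ip_address


def client_ip_seen_by_lb(xff_header):
    """Return the right-most valid IP in an X-Forwarded-For value, or None.

    Assumption: the load balancer appends the peer address it saw, so the
    last entry is the only one not supplied by the client.
    """
    if not xff_header:
        return None
    last = xff_header.split(",")[-1].strip()
    try:
        # Validate and normalise the address; reject anything that is not an IP.
        return str(ip_address(last))
    except ValueError:
        return None
```

Entries to the left of the last one were supplied upstream of the load balancer and should be treated as untrusted input.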

• Security features

Amazon VPC (Virtual Private Cloud) provides additional networking and security choices because it allows you to create and manage security groups. With AWS Elastic Load Balancer, you can support applications within an Amazon VPC for stronger network security. An IT team can specify whether it wants an internet-facing or internal load balancer. The latter option enables a developer to route traffic through an ELB using private IP addresses, enhancing security by keeping traffic within the VPC.

ALB guards against HTTP desync vulnerabilities using the HTTP Desync Guardian library, without compromising the service’s availability or latency. Depending on the application’s architecture, customers can also choose their level of tolerance for suspicious requests.

To enhance the security posture of your load balancers, it is recommended to implement specific access control measures:

- Utilize IAM Policies to define and manage permissions effectively.

- Configure resource-level permissions to control access to load balancer resources based on roles and responsibilities.

- Employ security groups to regulate inbound and outbound traffic, ensuring that only authorized sources and destinations interact with your load balancers.

For optimal security, consider setting up internet-facing load balancers with recommended rules for inbound and outbound traffic. When configuring internal load balancers, establish access controls based on VPC CIDR blocks and instance security groups. By following these best practices, you can fortify the authentication and access control mechanisms of your load balancers, bolstering the overall security of your applications and infrastructure.

Users can privately access Elastic Load Balancing APIs from their Amazon Virtual Private Cloud (VPC) by setting up VPC endpoints. These endpoints allow direct routing between the VPC and Elastic Load Balancing APIs, eliminating the requirement for an Internet gateway, NAT gateway, or VPN connection.

• Outposts Support

ALB supports AWS Outposts, which brings the same AWS services and tools available in the cloud to an on-premises or co-located hybrid environment. Customers can provision ALBs on supported instance types, and the ALB scales automatically up to the capacity available on the rack without manual intervention. Customers can also receive notifications about their load balancing capacity needs. To provision and manage ALBs on Outposts, customers use the same AWS Console, APIs, and CLI that they use in the Region.

• Support for HTTPS

An ALB can terminate HTTPS connections between clients and the load balancer. SSL certificates for Application Load Balancers can be managed using AWS Identity and Access Management (IAM).

• HTTP/2 and gRPC

HTTP/2 is a newer version of the HTTP protocol that allows multiple requests to be sent over the same connection and compresses header data before sending it. ALB can also route and load balance gRPC traffic between microservices, or between gRPC-enabled clients and services. gRPC traffic management can be introduced into existing architectures without changing the underlying infrastructure of the clients or services. gRPC, which uses HTTP/2 for transport, is a popular choice for inter-service communication in microservice architectures. Its advantages over REST include binary serialisation and broad language support, along with the inherent benefits of HTTP/2 such as a smaller network footprint, compression, and bi-directional streaming.

• Offload TLS

You can create an HTTPS listener, which uses encrypted connections (also known as SSL offload). With SSL offloading, the load balancer terminates the SSL/TLS connection and decrypts incoming traffic itself, so your targets do not have to.

Securing communication between your load balancer and clients requires SSL or TLS. ALB can terminate a client’s TLS session at the load balancer, while the client’s source IP address remains available to your back-end applications through the X-Forwarded-For header. TLS listeners can use predefined security policies to meet compliance and security standards.

To manage your server certificates, you can use either AWS Certificate Manager or AWS Identity and Access Management. Using Server Name Indication (SNI), you can serve multiple secure websites from a single TLS listener. If the client’s hostname matches multiple certificates, the load balancer picks one using a smart selection algorithm.
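To make the idea of SNI-based certificate selection concrete, here is a deliberately simplified sketch. It is not the load balancer's actual algorithm, which is not described here; it only assumes that an exact hostname match is preferred over a single-label wildcard match, and that a default certificate is used otherwise. The function names are hypothetical.

```python
def name_matches(cert_name, hostname):
    """True if a certificate name (exact or '*.domain') covers the hostname."""
    cert_name, hostname = cert_name.lower(), hostname.lower()
    if cert_name == hostname:
        return True
    if cert_name.startswith("*."):
        suffix = cert_name[1:]  # e.g. ".example.com"
        if hostname.endswith(suffix):
            prefix = hostname[: -len(suffix)]
            # A wildcard covers exactly one non-empty label.
            return prefix != "" and "." not in prefix
    return False


def select_certificate(hostname, cert_names, default_cert):
    """Prefer an exact match, then a wildcard match, then the default."""
    for name in cert_names:
        if name.lower() == hostname.lower():
            return name
    for name in cert_names:
        if name_matches(name, hostname):
            return name
    return default_cert
```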

• Sticky Sessions

Sticky sessions route requests from the same client to the same target. ALBs support both duration-based cookies and application-based cookies. The key decision is how long the load balancer should keep routing a user’s requests to the same target. Sticky sessions are enabled at the target group level, and you can use a combination of duration-based stickiness, application-based stickiness, and no stickiness across your target groups.
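The following is a minimal sketch of duration-based stickiness, not the load balancer's real implementation. It assumes the binding between a cookie and a target expires a fixed number of seconds after it is created; the class name `DurationStickiness` and the `sticky-N` cookie values are hypothetical.

```python
import itertools


class DurationStickiness:
    """Toy model: a cookie pins a client to one target until it expires."""

    def __init__(self, targets, duration_seconds):
        self._duration = duration_seconds
        self._round_robin = itertools.cycle(list(targets))
        self._ids = itertools.count(1)
        self._bindings = {}  # cookie -> (target, expires_at)

    def route(self, cookie, now):
        """Return (target, cookie) for a request arriving at time `now`."""
        binding = self._bindings.get(cookie)
        if binding is not None and now < binding[1]:
            return binding[0], cookie  # still sticky
        # No cookie, unknown cookie, or expired binding: pick a new target.
        target = next(self._round_robin)
        new_cookie = f"sticky-{next(self._ids)}"
        self._bindings[new_cookie] = (target, now + self._duration)
        return target, new_cookie
```

A client that presents its cookie within the duration keeps reaching the same target; after expiry it is treated as a new client.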

• Native IPv6 Support

Application Load Balancers support native IPv6 in a virtual private cloud (VPC). This allows clients to connect to the ALB over either IPv4 or IPv6.

• Containerized Application Support

To support containers, an Application Load Balancer can load balance traffic across multiple ports on a single Amazon EC2 instance. Deep integration with Amazon Elastic Container Service (ECS) provides a fully managed container offering. In the ECS task definition you can specify a dynamic port, which gives the container an unused port when it is scheduled on an EC2 instance. The ECS scheduler then automatically registers the task with the load balancer using this port.
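The dynamic-port idea can be sketched as a small simulation. This is not the ECS scheduler; it only assumes that each scheduled task receives an unused host port and that the (instance, port) pair is registered as a target. `TargetGroup`, `schedule_task`, and the port range are all hypothetical.

```python
class TargetGroup:
    """Toy target group that records (instance_id, port) targets."""

    def __init__(self):
        self.targets = set()

    def register(self, instance_id, port):
        self.targets.add((instance_id, port))


def schedule_task(instance_id, used_ports, target_group,
                  port_range=range(32768, 61000)):
    """Assign the first unused host port and register it as a target."""
    for port in port_range:
        if port not in used_ports:
            used_ports.add(port)
            target_group.register(instance_id, port)
            return port
    raise RuntimeError("no free port on instance")
```

Two tasks placed on the same instance end up as two distinct targets, which is what lets one load balancer spread traffic across several containers on a single host.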

• Routing by Content

- Content-based routing: An Application Load Balancer can route a request to a service based on the content of the request, including the Host field, URL path, HTTP headers, HTTP method, query string, and source IP address.

- Host-based routing: You can route to several domains from a single load balancer based on the Host field in the HTTP header.

- Path-based routing: You can route a client request based on the URL path of the HTTP request.

- HTTP header-based routing: You can route a client request based on the value of any HTTP header.

- HTTP method-based routing: You can route a client request based on any standard or custom HTTP method.

- Query string-based routing: You can route a client request based on a query string or query parameters.

- Source IP address CIDR-based routing: You can route a client request based on the source IP address CIDR from which the request originates.
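The routing conditions listed above can be sketched as a small rule matcher. This is an illustration under assumptions rather than the load balancer's implementation: rules are evaluated in ascending priority order, all conditions in a rule must match, and `*`/`?` wildcards are approximated with `fnmatchcase`. The `Rule` class and the request dictionary layout are hypothetical.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatchcase
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass
class Rule:
    priority: int
    target_group: str
    host: Optional[str] = None          # e.g. "*.example.com"
    path: Optional[str] = None          # e.g. "/api/*"
    method: Optional[str] = None        # e.g. "POST"
    headers: dict = field(default_factory=dict)
    query: dict = field(default_factory=dict)
    source_cidr: Optional[str] = None   # e.g. "10.0.0.0/8"


def rule_matches(rule, request):
    """Every condition that is set on the rule must hold for the request."""
    if rule.host and not fnmatchcase(request["host"].lower(), rule.host.lower()):
        return False
    if rule.path and not fnmatchcase(request["path"], rule.path):
        return False
    if rule.method and request["method"].upper() != rule.method.upper():
        return False
    if any(request["headers"].get(k) != v for k, v in rule.headers.items()):
        return False
    if any(request["query"].get(k) != v for k, v in rule.query.items()):
        return False
    if rule.source_cidr and ip_address(request["source_ip"]) not in ip_network(rule.source_cidr):
        return False
    return True


def route(rules, request, default_target_group):
    """Return the target group of the first matching rule by priority."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule_matches(rule, request):
            return rule.target_group
    return default_target_group
```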

• Target IP addresses

You can load balance any application hosted on AWS or on-premises by using the IP addresses of the application backends as targets. This makes it possible to load balance to an application backend hosted on any IP address and any interface of an instance. Multiple applications running on the same instance can each have an associated security group and use the same port. IP addresses can also be used as targets to load balance applications hosted on-premises (over Direct Connect or a VPN connection), in peered VPCs, and in EC2-Classic (using ClassicLink). Load balancing across AWS and on-premises resources helps you migrate to the cloud, burst to the cloud, or fail over to the cloud.

• Targets for Lambda

ALBs can invoke AWS Lambda functions to serve HTTP(S) requests from any HTTP client, including web browsers. The load balancer can route requests to different Lambda functions. An Application Load Balancer can also serve as a common HTTP endpoint for both server-based and serverless applications. You can build an entire website using Lambda functions, or combine Lambda functions with EC2 instances, containers, and on-premises servers.
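A Lambda function behind a load balancer receives the HTTP request as an event and returns a dictionary describing the HTTP response. The handler below is a minimal sketch; the greeting logic and the `name` query parameter are made up for illustration, and only commonly documented event and response keys are used.

```python
import json


def lambda_handler(event, context):
    """Answer a load-balancer-invoked request with a small JSON document."""
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")  # hypothetical query parameter
    body = json.dumps({
        "message": f"hello, {name}",
        "path": event.get("path", "/"),
        "method": event.get("httpMethod", "GET"),
    })
    return {
        "statusCode": 200,
        "statusDescription": "200 OK",
        "isBase64Encoded": False,
        "headers": {"Content-Type": "application/json"},
        "body": body,
    }
```

Because the handler is a plain function, it can be exercised locally by passing a dictionary that mimics the event.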

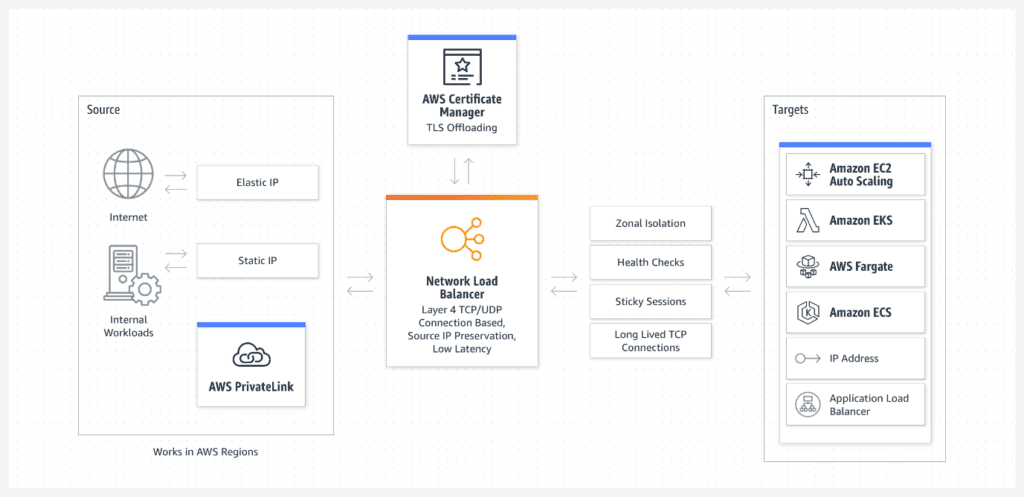

Network Load Balancer:

The Network Load Balancer (NLB) routes connections to targets such as Amazon EC2 instances, microservices, and containers within the Amazon VPC. It can handle millions of requests per second while maintaining ultra-low latency. It is also optimized for sudden and volatile traffic patterns while using a single static IP address per Availability Zone. NLB integrates with other AWS services such as Auto Scaling, CloudFormation, and AWS Certificate Manager (ACM).

Additional Functions:

• Connection-based Layer 4 balancing

Connection-based Layer 4 load balancing routes connections to targets such as Amazon EC2 instances, containers, and IP addresses based on IP protocol data.

• TLS Offloading

NLB supports terminating clients’ TLS sessions at the load balancer. This lets you offload TLS termination to the load balancer while preserving the source IP address for your back-end applications. TLS listeners can use predefined security policies to meet compliance and security requirements. Because the load balancer handles encryption and decryption, the computational load on your compute resources is reduced, leaving them free to focus on their primary tasks. AWS Certificate Manager and AWS Identity and Access Management can be used to manage your server certificates.

• Sticky Sessions

Sticky sessions (source IP affinity) route requests from the same client to the same target. Stickiness is defined at the target group level.
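Source IP affinity can be pictured as a deterministic mapping from a client address to a target. The sketch below uses a plain hash for that mapping; this is an assumption made for illustration and not a description of how the load balancer selects targets internally. `pick_target` is a hypothetical name.

```python
import hashlib


def pick_target(client_ip, targets):
    """Map a client IP to one target so repeat requests land on the same one."""
    if not targets:
        raise ValueError("no targets registered")
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an integer index.
    index = int.from_bytes(digest[:8], "big") % len(targets)
    return targets[index]
```

Note that with this naive scheme, changing the target list can remap existing clients.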

• Low Latency

Network Load Balancer offers very low latencies for latency-sensitive applications. On TLS listeners, SNI makes it possible to serve multiple secure websites from the same listener. If the client’s hostname matches several certificates, the load balancer uses a smart selection algorithm to choose one.

• Preserve the source IP address

Network Load Balancer preserves the client-side source IP address, so the back-end can see the client’s originating IP address. Applications can then use this data for further processing.

• Static IP support

Network Load Balancer automatically provides a static IP address for each Availability Zone (subnet), which applications can use as the load balancer’s front-end address.

• Elastic IP support

Network Load Balancer also gives you the option to assign an Elastic IP address to each Availability Zone (subnet), providing your own fixed IP address.

• DNS Failover

If there are no healthy targets registered with the Network Load Balancer in a zone, or if the Network Load Balancer nodes in that zone are unhealthy, Amazon Route 53 routes traffic to load balancer nodes in other Availability Zones.
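The failover condition can be expressed as a simple filter. This sketch only models the rule stated above (a zone stays in DNS when its load balancer node is healthy and it has at least one healthy target); the dictionary keys and function name are hypothetical.

```python
def zones_in_dns(zones):
    """Return the zone IPs that should remain in the DNS answer."""
    return [
        zone["ip"]
        for zone in zones
        if zone["node_healthy"] and zone["healthy_targets"] > 0
    ]
```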

• Integration with Amazon Route 53

If your Network Load Balancer is unresponsive, the Route 53 integration removes the unavailable load balancer IP address from service and redirects traffic to an alternate Network Load Balancer in another Region.

• Integration with AWS services

Network Load Balancer is integrated with a variety of AWS services, including Auto Scaling, Elastic Container Service (ECS), CloudFormation, Elastic Beanstalk, CloudWatch, Config, CloudTrail, CodeDeploy, and AWS Certificate Manager (ACM).

• WebSocket applications

Network Load Balancer supports long-lived TCP connections, which makes it well suited to WebSocket applications.

• Central API Support

Network Load Balancer uses the same application programming interface (API) as Application Load Balancer. This lets you work with target groups, run health checks, and load balance across multiple ports on the same Amazon EC2 instance to support containerized applications.

• Zonal Isolation

Network Load Balancer is designed for application architectures that run in a single zone. If something in an Availability Zone fails, traffic automatically fails over to other healthy Availability Zones. Although we recommend that customers deploy load balancers and targets in multiple Availability Zones for high availability, Network Load Balancer can be enabled in a single Availability Zone to support architectures that require zonal isolation.

Gateway Load Balancer:

Third-party virtual appliances can be easily managed and scaled using Gateway Load Balancer. Multiple virtual appliances may be scaled up or down independently via a single gateway, allowing for flexible traffic distribution. Reducing the number of potential failure points can improve network availability. AWS Marketplace allows you to search for, test, and purchase virtual appliances from third-party manufacturers. Whether you want to stick with your current vendors or try a new one, your virtual appliances will be easier to use right away thanks to this smooth setup process.

Features

You can enable access logging on your load balancer for more granular traffic analysis. This is optional and disabled by default to give you control over your logging needs. When enabled, access logs provide detailed information about incoming requests, including the time the request was received, the client’s IP address, request paths, and even the requests that did not reach your targets. As with CloudTrail, the only cost associated with access logging is the S3 storage used to save the logs.

Improve the security of your apps with certificate management, user authentication, and SSL/TLS decryption. Create highly available apps that can be scaled up or down on the fly. Monitor your apps’ health and performance in real time to discover bottlenecks and ensure that service level agreements (SLAs) are met. Users can also privately access Elastic Load Balancing APIs from their Amazon Virtual Private Cloud (VPC) by setting up VPC endpoints, which route traffic directly between the VPC and the Elastic Load Balancing APIs without an Internet gateway, NAT gateway, or VPN connection.

• Auto-scale your virtual appliance instances

Gateway Load Balancer works with AWS Auto Scaling groups to scale your virtual appliance instances, so you have the resources you need as demand changes. When traffic spikes, additional appliance instances are launched and connected to the Gateway Load Balancer. Those instances are terminated once traffic returns to its typical level.

In addition to automatic scaling, Gateway Load Balancer also supports health checks to ensure your application’s continuous performance and reliability. You can continually measure your Gateway Load Balancer’s health and performance using Amazon CloudWatch metrics specific to each Availability Zone.

These metrics include load balancer metrics such as the count of target appliance instances, the health status of targets, the target count, the current number of active flows, and the maximum flows. By monitoring these metrics, you can gain insights into your load balancer’s overall health and performance. VPC Endpoint metrics such as the amount of processed bytes and packets and the number of Gateway Load Balancer Endpoint mappings can also be monitored.

By leveraging these metrics, you can proactively identify any potential issues or bottlenecks in your Gateway Load Balancer and take necessary actions to optimize its performance. This ensures that your application remains highly available and responsive to incoming traffic.

• High-availability virtual appliances from third parties

The Gateway Load Balancer ensures high availability and reliability by rerouting traffic when a virtual appliance fails. It performs regular health checks on each virtual appliance instance. When an appliance fails a set number of consecutive health checks, it is marked unhealthy.
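The consecutive-failure rule can be sketched as a small state tracker. This is an illustration, not the service's implementation: it assumes an appliance is marked unhealthy after a configurable number of failed checks in a row and marked healthy again after a configurable number of passes in a row. The class name and default thresholds are hypothetical.

```python
class HealthTracker:
    """Track one appliance's health from a stream of check results."""

    def __init__(self, unhealthy_threshold=3, healthy_threshold=3):
        self.unhealthy_threshold = unhealthy_threshold
        self.healthy_threshold = healthy_threshold
        self.healthy = True
        self._streak = 0  # consecutive results that contradict current state

    def record(self, passed):
        """Record one check result and return the resulting health state."""
        if passed == self.healthy:
            self._streak = 0  # result agrees with current state
            return self.healthy
        self._streak += 1
        limit = self.healthy_threshold if passed else self.unhealthy_threshold
        if self._streak >= limit:
            self.healthy = passed
            self._streak = 0
        return self.healthy
```

A single passing check in the middle of a run of failures resets the streak, so only uninterrupted runs change the state.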

• Continually measure health and performance

Your Gateway Load Balancer can be monitored using Amazon CloudWatch metrics specific to each Availability Zone. These include load balancer metrics (such as the count of target appliance instances, the health status of targets, the target count, the current number of active flows, and the maximum flows) and VPC endpoint metrics (such as the amount of processed bytes and packets and the number of Gateway Load Balancer Endpoint mappings).

• AWS Marketplace simplifies deployment.

Deploying a new virtual appliance can be as simple as selecting it in AWS Marketplace, which streamlines both the user experience and the deployment process.

• Secure connectivity with Gateway Load Balancer Endpoints

Gateway Load Balancer Endpoints are a new type of virtual private cloud (VPC) endpoint that connects traffic sources and destinations. Using PrivateLink technology, they privately connect Internet Gateways, Virtual Private Clouds, and other network resources. Because traffic flows over the AWS network, your data is never exposed to the public Internet.

• Authentication and Compatibility

ALB (Application Load Balancer) facilitates federated authentication by interfacing with identity providers that comply with the OpenID Connect (OIDC) standard, including popular services like Google, Facebook, and Amazon. The process is managed through setting up an authentication action in a listener rule, which is configured to work in conjunction with Amazon Cognito. Amazon Cognito allows the creation of user pools that manage the identities of users signing in through these OIDC-compliant providers. Additionally, AWS Serverless Application Model (SAM) can be utilized alongside Amazon Cognito to streamline the deployment and management of applications requiring federated identity capabilities.

Classic Load Balancer

The Classic Load Balancer (CLB) distributes connections and requests across multiple Amazon EC2 instances. CLB is intended for applications that were built within the EC2-Classic network. When working with a Virtual Private Cloud (VPC), it is recommended to use Application Load Balancer (ALB) for Layer 7 traffic and Network Load Balancer (NLB) for Layer 4 traffic.

The Classic Load Balancer is a legacy load balancer and is no longer recommended for new applications. It will be deprecated on December 31, 2022. Amazon strongly encourages customers to migrate to ALB or NLB, which offer more features and better performance than CLB.

Features

• Load Balancing at Layer 4 or 7

Classic Load Balancer supports Layer 7 load balancing of HTTP and HTTPS applications, with Layer 7 features such as X-Forwarded-For headers and sticky sessions. For applications that rely purely on TCP, strict Layer 4 load balancing is also available.

• SSL offloading

Classic Load Balancer supports SSL termination, which includes offloading SSL decryption from application instances, centralizing certificate management, and encrypting traffic to the back-end instances with optional public key authentication. You control the ciphers and protocols that the load balancer presents to clients.

• IPv6 Support

Both IPv4 and IPv6 are supported by the Classic Load Balancer when used with EC2-Classic networks.

Limitations and Shortcomings

The interaction between Amazon ECS (Elastic Container Service) and ALB (Application Load Balancer) centers around efficiently distributing incoming traffic across containerized applications. With ECS, operators set a desired count of running instances, which specifies the number of instances of a task that should be continuously running.

When integrated with an ECS service, an ALB can be configured to distribute incoming traffic across all tasks of a specified service, enhancing failure resilience and traffic management. This is particularly useful when tasks are defined with multiple container instances managed by ECS, as it facilitates efficient load balancing across these instances.

However, there are some limitations in the configuration and functionality. Each ECS service can be associated with only one load balancer, and within a single task definition, all the containers must reside on the same EC2 container instance, which can restrict scaling options. Furthermore, the ALB is limited to interacting with a single listener per task definition.

Despite these limitations, the ALB offers significant features advantageous for ECS tasks, such as dynamic host-port mapping, which allows ALB to direct traffic to multiple ports on the same container host. This increases flexibility in utilizing ports. Additionally, ALBs enable more refined control through path-based routing and the ability to set priority rules, which helps in directing traffic based on URL paths to various services within the ECS environment.

In essence, the synchronization between ECS and ALB provides a robust mechanism for service load balancing that supports advanced traffic distribution strategies while maintaining high availability and scalability of containerized applications.
