Amazon Neptune

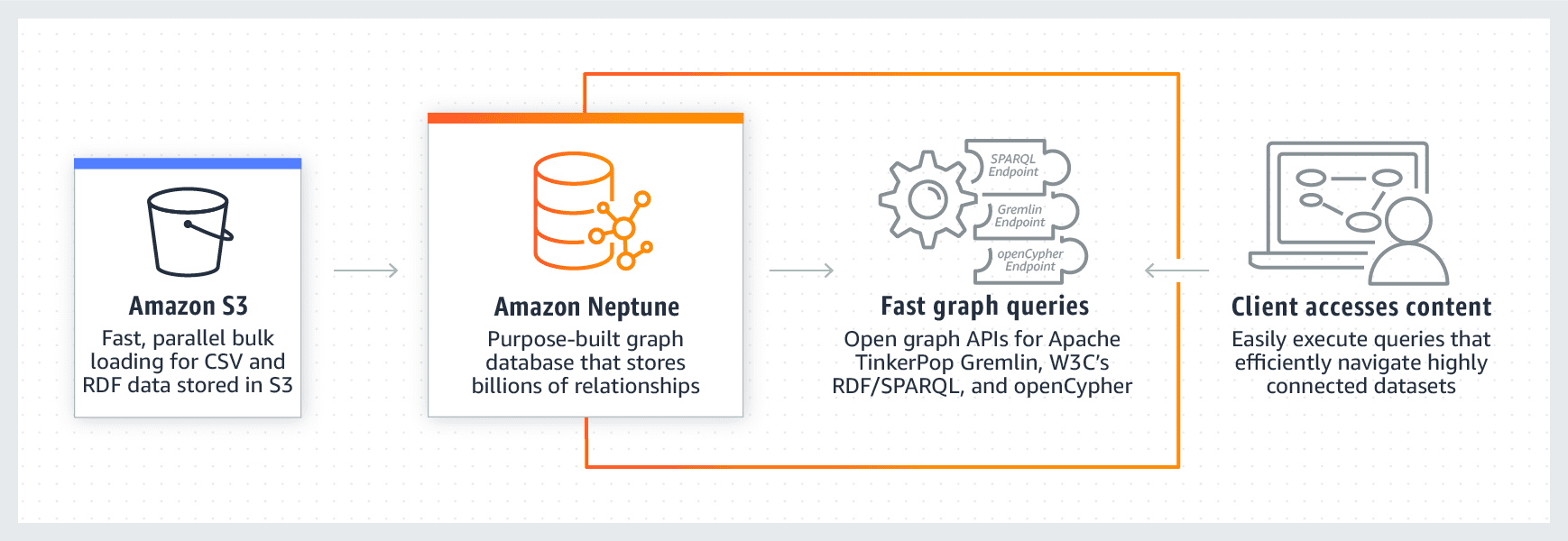

Amazon Neptune lets you build interactive graph applications that can query billions of connections in milliseconds. When relational databases are used for heavily connected data, two of the most common drawbacks are complex SQL queries and the difficulty of tuning them. With Amazon Neptune you can instead use two popular graph query languages, Apache TinkerPop Gremlin and W3C’s SPARQL, to run powerful queries on connected data.

Gremlin, from the Apache TinkerPop project, and SPARQL, a W3C standard, let you write simpler code and build relationship-processing applications more quickly.

Amazon Neptune is designed to provide greater than 99.99 percent availability by combining the database engine with an SSD-backed, virtualized storage layer purpose-built for database workloads. Neptune storage is fault tolerant and self-healing, and disk failures are repaired in the background without affecting database availability. Neptune automatically detects database crashes and restarts the database without needing crash recovery or a full rebuild of the database cache. If the entire instance fails, Neptune automatically fails over to one of up to 15 read replicas.

Launching an Amazon Neptune database instance from the AWS Management Console takes only a few steps. Neptune automatically scales storage, growing capacity and rebalancing I/O operations to provide consistent performance.

Amazon Neptune is not built on a relational database. Instead, it is a high-performance graph database engine that has been specifically designed for Amazon’s platform. Neptune is optimized for storing and querying complex graph data efficiently. It utilizes a scale-up architecture that is optimized for in-memory operations, allowing it to provide quick query evaluation capabilities even for large graphs.

How it Works

Features

Effortless and Expandable

- Queries on graphs can benefit from high throughput and low latency.

Neptune’s purpose-built engine uses a scale-up, in-memory-optimized architecture that stores and navigates graph data efficiently, so queries over large graphs are evaluated quickly. You can run fast, simple queries against Neptune with Gremlin or SPARQL.

- Easily Scale Database Compute Resources

You can scale the compute and memory resources of your production cluster up or down with a few clicks in the AWS Management Console. Scaling operations typically complete within a few minutes.
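As a rough API-level sketch of the same operation: `modify_db_instance` on a boto3 `neptune` client changes an instance’s class. The helper below only builds the keyword arguments, so it runs without AWS credentials. The instance name and class are hypothetical, and the `db.` prefix check is a sanity check, not a list of valid classes.

```python
from typing import Any, Dict


def scale_request(instance_id: str, instance_class: str,
                  apply_now: bool = True) -> Dict[str, Any]:
    """Keyword arguments for client.modify_db_instance that resize one instance."""
    if not instance_class.startswith("db."):
        raise ValueError("instance classes look like 'db.r5.large'")
    return {
        "DBInstanceIdentifier": instance_id,
        "DBInstanceClass": instance_class,
        # True applies the change now; False defers it to the maintenance window.
        "ApplyImmediately": apply_now,
    }


# With credentials: boto3.client("neptune").modify_db_instance(**kwargs)
kwargs = scale_request("my-neptune-writer", "db.r5.2xlarge")  # hypothetical names
print(kwargs)
```

Resizing the writer in place restarts it, so adding a replica of the new size and failing over to it is a common way to reduce disruption.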

- Automated Storage Scalability

Amazon Neptune automatically grows your database volume as your storage needs grow, up to a maximum of 64 terabytes. There is no need to provision extra database storage in advance to keep up with future growth.

A Neptune database volume starts at a minimum of 10 GB and grows in 10 GB increments as your usage increases. Storage therefore scales with your data without affecting database performance and without manual capacity planning.
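The growth rule described above can be modeled with a few lines of arithmetic: round the data size up to the next 10 GB step, never below 10 GB and never above the 64 TB cap. This is a simplified illustration of the figures stated here, not Neptune’s internal allocation logic, and it assumes 1 TB = 1,024 GB.

```python
import math

INCREMENT_GB = 10          # growth step described in the text
MIN_GB = 10                # starting volume size described in the text
MAX_GB = 64 * 1024         # 64 TB ceiling, assuming 1 TB = 1,024 GB


def volume_size_gb(used_gb: float) -> int:
    """Smallest modeled volume size (in GB) that holds `used_gb` of data."""
    if used_gb < 0:
        raise ValueError("used_gb must be non-negative")
    if used_gb > MAX_GB:
        raise ValueError("data exceeds the 64 TB volume limit")
    size = math.ceil(used_gb / INCREMENT_GB) * INCREMENT_GB  # round up to a 10 GB step
    return min(MAX_GB, max(MIN_GB, size))


for used in (0, 3.5, 10, 10.1, 257):
    print(used, "GB used ->", volume_size_gb(used), "GB volume")
```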

- Low-Latency Read Replicas

Increase read throughput for high-volume applications by creating up to 15 database read replicas. Amazon Neptune replicas share the same underlying storage as the source instance, which lowers costs and avoids performing writes on the replica nodes. This frees more processing power to serve read requests and often reduces replica lag to single-digit milliseconds. Neptune also provides a single endpoint for read queries, so applications don’t have to keep track of replicas as they are added and removed.
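A minimal sketch of routing connections between the two endpoints, assuming hypothetical hostnames (real ones appear in the console and in the `Endpoint` and `ReaderEndpoint` fields returned by `describe_db_clusters`) and Neptune’s default port 8182. Read-only Gremlin work goes to the reader endpoint; anything that writes goes to the cluster endpoint. The resulting URL would be handed to a Gremlin driver such as gremlinpython’s `DriverRemoteConnection`, which is not imported here.

```python
from dataclasses import dataclass

NEPTUNE_PORT = 8182  # Neptune's default port


@dataclass(frozen=True)
class ClusterEndpoints:
    writer: str  # cluster endpoint: always points at the primary instance
    reader: str  # reader endpoint: distributes connections across read replicas


def gremlin_url(endpoints: ClusterEndpoints, read_only: bool) -> str:
    """Pick the WebSocket URL for a Gremlin connection based on the workload."""
    host = endpoints.reader if read_only else endpoints.writer
    return f"wss://{host}:{NEPTUNE_PORT}/gremlin"


# Hypothetical hostnames for a cluster named "my-graph".
eps = ClusterEndpoints(
    writer="my-graph.cluster-abc123.us-east-1.neptune.amazonaws.com",
    reader="my-graph.cluster-ro-abc123.us-east-1.neptune.amazonaws.com",
)
print(gremlin_url(eps, read_only=True))
```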

Quality and Reliability

- Instance Monitoring and Repair

Neptune continuously monitors the health of your database and its underlying EC2 instance. If the instance powering your database fails, the instance and its associated processes are restarted automatically. Because Neptune recovery does not need to replay database redo logs, instance restart times are typically 30 seconds or less. Neptune also isolates the database buffer cache from the database processes, so the cache can survive a database restart.

- Using Read Replicas in Multi-AZ Deployments

If an instance fails, Neptune automatically fails over to one of up to 15 Neptune replicas located across three Availability Zones. If no Neptune replicas exist, Neptune automatically attempts to create a new database instance.

- Self-healing and fault-tolerant Storage

Each 10 GB portion of your database volume is replicated six ways across three Availability Zones. Amazon Neptune’s fault-tolerant storage transparently handles the loss of up to two copies of data without affecting database write availability, and up to three copies without affecting read availability. Neptune’s storage is also self-healing: data blocks and disks are continuously scanned for errors and replaced automatically.
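The tolerance figures above can be expressed as a simple quorum model: six copies, with writes needing four healthy copies and reads needing three. Those quorum sizes are inferred from the stated tolerances (losing two copies keeps writes available, losing three keeps reads available), so treat this as an illustration rather than a description of Neptune internals.

```python
COPIES = 6          # six copies of each 10 GB portion, two per Availability Zone
WRITE_QUORUM = 4    # inferred: writes survive the loss of 2 copies
READ_QUORUM = 3     # inferred: reads survive the loss of 3 copies


def availability(failed_copies: int) -> dict:
    """Report which operations the modeled storage can still serve."""
    if not 0 <= failed_copies <= COPIES:
        raise ValueError("failed_copies must be between 0 and 6")
    healthy = COPIES - failed_copies
    return {"healthy": healthy,
            "writes": healthy >= WRITE_QUORUM,
            "reads": healthy >= READ_QUORUM}


# In this model, losing a whole Availability Zone (2 copies) keeps reads and writes up.
for failed in range(5):
    print(failed, availability(failed))
```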

- Point-in-Time Recovery and Background Incremental Backups

Amazon Neptune’s backup capability enables point-in-time recovery for your instance, letting you restore your database to any point within your retention period, up to the last five minutes. You can configure a retention period for automated backups of up to 35 days. Automated backups are stored in Amazon S3, which is designed for 99.999999999 percent durability. Neptune backups are incremental, run in the background, and have no impact on database performance.
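A sketch of checking a requested restore time against the window described above (the start of the retention period up to five minutes ago) before calling `restore_db_cluster_to_point_in_time` on a boto3 `neptune` client. The five-minute lag and 35-day cap come from the text; the cluster names are hypothetical, the 1-day minimum is an assumption, and production code should read `EarliestRestorableTime` and `LatestRestorableTime` from `describe_db_clusters` rather than computing the window locally.

```python
from datetime import datetime, timedelta, timezone

MAX_RETENTION_DAYS = 35
RESTORE_LAG = timedelta(minutes=5)


def restore_window(now: datetime, retention_days: int) -> tuple:
    """Approximate (earliest, latest) restorable times for a retention period."""
    if not 1 <= retention_days <= MAX_RETENTION_DAYS:
        raise ValueError("retention must be 1-35 days")
    return now - timedelta(days=retention_days), now - RESTORE_LAG


def pitr_request(source_cluster: str, new_cluster: str, when: datetime,
                 now: datetime, retention_days: int) -> dict:
    """Build keyword arguments for client.restore_db_cluster_to_point_in_time."""
    earliest, latest = restore_window(now, retention_days)
    if not earliest <= when <= latest:
        raise ValueError(f"{when.isoformat()} is outside {earliest.isoformat()}..{latest.isoformat()}")
    return {
        "SourceDBClusterIdentifier": source_cluster,
        "DBClusterIdentifier": new_cluster,  # the restore creates a new cluster
        "RestoreToTime": when,
    }


now = datetime(2024, 1, 31, 12, 0, tzinfo=timezone.utc)
kwargs = pitr_request("prod-graph", "prod-graph-restored",
                      now - timedelta(hours=2), now, retention_days=7)
# With boto3: boto3.client("neptune").restore_db_cluster_to_point_in_time(**kwargs)
print(kwargs)
```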

- Snapshots of the database

Database snapshots are user-initiated backups of your instance, stored in Amazon S3 and kept until you explicitly delete them. They build on the automated incremental snapshots to reduce the time and storage required. You can create a new database instance from a snapshot at any time.
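Manual snapshots and restores map to two Neptune management calls on a boto3 `neptune` client, `create_db_cluster_snapshot` and `restore_db_cluster_from_snapshot`. The sketch builds their keyword arguments only; the cluster and snapshot names are hypothetical.

```python
from typing import Any, Dict


def snapshot_request(cluster_id: str, snapshot_id: str) -> Dict[str, Any]:
    """Keyword arguments for client.create_db_cluster_snapshot (a manual snapshot)."""
    return {"DBClusterIdentifier": cluster_id,
            "DBClusterSnapshotIdentifier": snapshot_id}


def restore_from_snapshot_request(snapshot_id: str, new_cluster_id: str) -> Dict[str, Any]:
    """Keyword arguments for client.restore_db_cluster_from_snapshot.

    The restore creates a new cluster; the source cluster is left untouched.
    """
    return {"DBClusterIdentifier": new_cluster_id,
            "SnapshotIdentifier": snapshot_id,
            "Engine": "neptune"}


# Hypothetical names. With boto3, wait until describe_db_cluster_snapshots reports
# Status == "available" before restoring, then add an instance to the new cluster.
take = snapshot_request("prod-graph", "prod-graph-before-migration")
restore = restore_from_snapshot_request("prod-graph-before-migration", "prod-graph-copy")
print(take, restore)
```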

Need help on AWS?

AWS Partners, such as AllCode, are trusted and recommended by Amazon Web Services to help you deliver with confidence. AllCode employs the same mission-critical best practices and services that power Amazon’s massive ecommerce platform.

Open Graph APIs

- Property Graph Support with Apache TinkerPop Gremlin

Neptune supports querying with two powerful languages: Gremlin for property graphs and SPARQL for RDF models. Property graphs are popular because they are familiar to developers used to relational models, and the Gremlin traversal language provides a way to quickly traverse them. Amazon Neptune supports the property graph model and provides a Gremlin WebSocket server compatible with Apache TinkerPop. You can build Gremlin traversals over property graphs, and existing Gremlin applications can use Neptune by changing their Gremlin service configuration to point to a Neptune instance.
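Besides the WebSocket server used by TinkerPop drivers, Neptune accepts Gremlin queries over HTTP at `/gremlin`. The sketch builds, but does not send, such a request with the standard library. The host, the vertex id `alice`, and the `knows` edge label are hypothetical, and it assumes IAM database authentication is turned off; otherwise the request must be signed with AWS Signature Version 4.

```python
import json
import urllib.request


def gremlin_http_request(host: str, traversal: str, port: int = 8182) -> urllib.request.Request:
    """Build (but do not send) a POST to Neptune's Gremlin HTTP endpoint."""
    body = json.dumps({"gremlin": traversal}).encode("utf-8")
    return urllib.request.Request(
        url=f"https://{host}:{port}/gremlin",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Names of up to 10 people that the hypothetical vertex 'alice' knows.
req = gremlin_http_request(
    "my-graph.cluster-abc123.us-east-1.neptune.amazonaws.com",  # hypothetical host
    "g.V('alice').out('knows').values('name').limit(10)",
)
# To send it from inside the cluster's VPC: urllib.request.urlopen(req)
print(req.full_url, req.data)
```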

When managing highly connected datasets with Amazon Neptune, a few best practices help ensure good performance, security, and scalability. Data modeling plays a central role: design your graph model around the queries you expect to run, and structure nodes, edges, and properties so the data you need is quick to reach. Neptune indexes graph data automatically, so rather than creating indexes yourself, write Gremlin and SPARQL queries that make good use of those built-in indexes; doing so can significantly improve query speed and overall efficiency.

For security, Neptune lets you create and manage encryption keys with AWS Key Management Service (KMS). Encrypting your Neptune databases protects the data in the underlying storage as well as the automated backups, snapshots, and replicas in the same cluster.

- W3C’s RDF 1.1 and SPARQL specifications are supported.

Neptune also supports the W3C’s RDF 1.1 and SPARQL 1.1 specifications. RDF is popular because it offers flexibility for modeling complex information domains, and many existing public datasets, such as Wikidata and PubChem, are published in RDF; they can be loaded into Neptune and queried through its SPARQL endpoint. Because Neptune exposes SPARQL over an HTTP REST interface, developers can integrate it into new and existing graph applications.
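A SPARQL query is sent to Neptune’s `/sparql` endpoint as a form-encoded `query` parameter, following the SPARQL 1.1 Protocol. The sketch builds, but does not send, such a request; the host and the FOAF-based query are hypothetical, and it assumes IAM database authentication is off.

```python
import urllib.parse
import urllib.request


def sparql_query_request(host: str, query: str, port: int = 8182) -> urllib.request.Request:
    """Build (but do not send) a SPARQL 1.1 query POST for Neptune's /sparql endpoint."""
    body = urllib.parse.urlencode({"query": query}).encode("utf-8")
    return urllib.request.Request(
        url=f"https://{host}:{port}/sparql",
        data=body,
        headers={
            "Content-Type": "application/x-www-form-urlencoded",
            "Accept": "application/sparql-results+json",  # standard SPARQL JSON results
        },
        method="POST",
    )


QUERY = """
PREFIX foaf: <http://xmlns.com/foaf/0.1/>
SELECT ?name WHERE { ?person foaf:name ?name } LIMIT 10
"""
req = sparql_query_request("my-graph.cluster-abc123.us-east-1.neptune.amazonaws.com", QUERY)
print(req.full_url)
```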

Exceptionally Secure

- Isolation of the Network

Security is a priority in Amazon Neptune, and Amazon Web Services (AWS) offers a range of features to protect your data. One key aspect is network isolation: Amazon Neptune runs in Amazon Virtual Private Cloud (VPC), which lets you isolate your database in your own virtual network and connect to your on-premises IT infrastructure using industry-standard encrypted IPsec VPNs.

Another crucial security measure is managing permissions at the resource level. With Amazon Neptune, you can govern access to specific resources such as database instances, database snapshots, and parameter groups, controlling which actions AWS Identity and Access Management (IAM) users and groups can perform on them. By tagging your Neptune resources, you can further refine permissions based on those tags. For instance, you can restrict changes to or deletion of “Production” database instances to Database Administrators only, while allowing developers to modify “Development” instances, all through well-defined IAM policies.
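The tag-based scenario above can be expressed as an identity-based IAM policy. This sketch assumes Neptune management actions use the `rds:` prefix (in line with Neptune’s use of Amazon RDS permissions), a hypothetical `environment` tag, and the `aws:ResourceTag/environment` condition key; verify action names and condition keys against the Neptune IAM documentation. The `is_allowed` function is a simplified model of IAM evaluation (an explicit deny beats an allow, and anything not allowed is denied), not the real policy engine.

```python
import json

# Hypothetical "environment" tag. Developers may modify or reboot development
# instances; nobody covered by this policy may modify or delete production ones.
DEV_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ModifyDevelopmentInstancesOnly",
            "Effect": "Allow",
            "Action": ["rds:ModifyDBInstance", "rds:RebootDBInstance"],
            "Resource": "arn:aws:rds:*:*:db:*",
            "Condition": {"StringEquals": {"aws:ResourceTag/environment": "development"}},
        },
        {
            "Sid": "NeverTouchProduction",
            "Effect": "Deny",
            "Action": ["rds:ModifyDBInstance", "rds:DeleteDBInstance"],
            "Resource": "arn:aws:rds:*:*:db:*",
            "Condition": {"StringEquals": {"aws:ResourceTag/environment": "production"}},
        },
    ],
}


def is_allowed(policy: dict, action: str, tags: dict) -> bool:
    """Simplified IAM check: an explicit Deny wins, otherwise any Allow grants,
    and anything not allowed is denied. Only Action lists and StringEquals on
    aws:ResourceTag/<key> conditions are understood."""
    prefix = "aws:ResourceTag/"

    def matches(stmt: dict) -> bool:
        if action not in stmt["Action"]:
            return False
        wanted = stmt.get("Condition", {}).get("StringEquals", {})
        return all(tags.get(key[len(prefix):]) == value for key, value in wanted.items())

    hits = [stmt for stmt in policy["Statement"] if matches(stmt)]
    if any(stmt["Effect"] == "Deny" for stmt in hits):
        return False
    return any(stmt["Effect"] == "Allow" for stmt in hits)


print(json.dumps(DEV_POLICY, indent=2))
```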

- Permissions at the Resource Level

Neptune uses operational technology shared with Amazon RDS for certain management features, including instance lifecycle management, encryption at rest with AWS Key Management Service (KMS) keys, and security group management. Because of this, Amazon RDS permissions and resources are required to use Amazon Neptune.

- Encryption

You can create and manage the encryption keys that Amazon Neptune uses to protect your databases with AWS Key Management Service (KMS). On a Neptune-encrypted database instance, the data stored in the underlying storage is encrypted, as are the automated backups, snapshots, and replicas in the same cluster.
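An encrypted cluster is requested through the `StorageEncrypted` and `KmsKeyId` parameters of `create_db_cluster` on a boto3 `neptune` client. The sketch below only builds those keyword arguments; the cluster name and key ARN are hypothetical, and a real deployment would also supply networking and parameter-group settings.

```python
from typing import Any, Dict, Optional


def encrypted_cluster_request(cluster_id: str,
                              kms_key_id: Optional[str] = None) -> Dict[str, Any]:
    """Keyword arguments for client.create_db_cluster with encryption at rest."""
    kwargs: Dict[str, Any] = {
        "DBClusterIdentifier": cluster_id,
        "Engine": "neptune",
        "StorageEncrypted": True,
    }
    if kms_key_id:
        # Optional customer managed key; omit it to use the default KMS key.
        kwargs["KmsKeyId"] = kms_key_id
    return kwargs


print(encrypted_cluster_request(
    "secure-graph",  # hypothetical cluster name
    "arn:aws:kms:us-east-1:123456789012:key/example-key-id",  # hypothetical key
))
```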

- Auditing

Amazon Neptune lets you log database events with minimal impact on database performance. The logs can later be analyzed for database management, security, governance, regulatory compliance, and other purposes. You can also monitor activity by sending audit logs to Amazon CloudWatch.
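Turning on audit logging is typically a two-step change: set the `neptune_enable_audit_log` cluster parameter to 1, then enable export of the `audit` log type to CloudWatch Logs. The sketch builds the keyword arguments for the two boto3 `neptune` client calls involved. The group and cluster names are hypothetical, and because the default parameter group cannot be modified, it assumes a custom DB cluster parameter group is already attached to the cluster.

```python
from typing import Any, Dict, Tuple


def audit_log_requests(cluster_id: str,
                       param_group: str) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Keyword arguments for the two calls that enable and export audit logs.

    First:  client.modify_db_cluster_parameter_group(**params)
    Second: client.modify_db_cluster(**export)
    """
    params = {
        "DBClusterParameterGroupName": param_group,  # custom group attached to the cluster
        "Parameters": [{
            "ParameterName": "neptune_enable_audit_log",
            "ParameterValue": "1",                   # 1 = enabled
            "ApplyMethod": "pending-reboot",         # takes effect after a reboot
        }],
    }
    export = {
        "DBClusterIdentifier": cluster_id,
        "CloudwatchLogsExportConfiguration": {"EnableLogTypes": ["audit"]},
    }
    return params, export


params, export = audit_log_requests("prod-graph", "prod-graph-params")  # hypothetical names
print(params, export)
```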

Fully Managed

- Simple to Use

Getting started with Amazon Neptune is easy. Create a new Neptune database instance using the AWS Management Console; Neptune database instances come pre-configured with parameters and settings appropriate for the instance class you select. You can launch a database and connect your application within minutes, and you can fine-tune your database with DB Parameter Groups.

- Easy to Operate

Amazon Neptune simplifies building and running high-performance graph databases. You don’t need to create specific graph indexes, and Neptune provides timeout and memory usage limits that reduce the impact of queries that consume too much memory or run too long. Performance metrics for your database instances are available through Amazon CloudWatch.

- Monitoring and Metrics

Amazon Neptune uses Amazon CloudWatch to monitor your database instances. The AWS Management Console displays more than 20 key operational metrics for your database instances, including CPU, memory, storage, query performance, and active connections.
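The same metrics can be pulled programmatically. This sketch builds keyword arguments for CloudWatch’s `get_metric_statistics`, using the `AWS/Neptune` namespace, the `CPUUtilization` metric, and the `DBClusterIdentifier` dimension, then picks the busiest interval from a list of returned datapoints. The cluster name, time range, and sample datapoints are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, List


def cpu_query(cluster_id: str, end: datetime, hours: int = 3) -> Dict[str, Any]:
    """Keyword arguments for cloudwatch.get_metric_statistics on Neptune CPU."""
    return {
        "Namespace": "AWS/Neptune",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "DBClusterIdentifier", "Value": cluster_id}],
        "StartTime": end - timedelta(hours=hours),
        "EndTime": end,
        "Period": 300,                        # 5-minute buckets
        "Statistics": ["Average", "Maximum"],
    }


def busiest_period(datapoints: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Return the datapoint with the highest Maximum value."""
    return max(datapoints, key=lambda point: point["Maximum"])


# With boto3: boto3.client("cloudwatch").get_metric_statistics(**query)["Datapoints"]
query = cpu_query("prod-graph", datetime.now(timezone.utc))
print(query["Namespace"], query["MetricName"])
```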

- Auto-Patching

Amazon Neptune keeps your database up to date with the latest patches. You can control if and when your instance is patched through DB Engine Version Management.

- Database Event Alerts

Amazon Neptune can notify you by email or SMS of important database events, such as an automated failover. You can subscribe to database events in the AWS Management Console.
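Event notifications are delivered through Amazon SNS: you create an SNS topic, subscribe email addresses or phone numbers to it, and then create a Neptune event subscription that points at the topic. The sketch builds keyword arguments for `create_event_subscription` on a boto3 `neptune` client. The topic ARN and cluster name are hypothetical, and the `failover` category is an assumption to check against `describe_event_categories` for your source type.

```python
from typing import Any, Dict


def failover_alert_request(name: str, topic_arn: str, cluster_id: str) -> Dict[str, Any]:
    """Keyword arguments for client.create_event_subscription (failover alerts)."""
    if not topic_arn.startswith("arn:aws:sns:"):
        raise ValueError("expected an Amazon SNS topic ARN")
    return {
        "SubscriptionName": name,
        "SnsTopicArn": topic_arn,        # topic with email/SMS subscribers
        "SourceType": "db-cluster",
        "SourceIds": [cluster_id],
        "EventCategories": ["failover"],
        "Enabled": True,
    }


print(failover_alert_request(
    "neptune-failover",
    "arn:aws:sns:us-east-1:123456789012:db-alerts",  # hypothetical topic
    "prod-graph",                                    # hypothetical cluster
))
```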

- Database Cloning

Amazon Neptune lets you clone multi-terabyte database clusters in minutes. Cloning is useful for application development, testing, database upgrades, and analytical queries: having immediate access to a copy of the data speeds up software development and updates as well as analytics.

You can clone an Amazon Neptune database with a few clicks in the Management Console. The clone is replicated across three Availability Zones.
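Through the API, a Neptune clone is created with `restore_db_cluster_to_point_in_time` using the copy-on-write restore type, which shares storage with the source until either cluster changes the data. Like any restored cluster, the clone starts without instances, so a `create_db_instance` call follows. Both helpers only build boto3 `neptune` keyword arguments; all names and the instance class are hypothetical.

```python
from typing import Any, Dict


def clone_request(source_cluster: str, clone_cluster: str) -> Dict[str, Any]:
    """Keyword arguments for client.restore_db_cluster_to_point_in_time that
    create a copy-on-write clone from the source's latest restorable time."""
    return {
        "SourceDBClusterIdentifier": source_cluster,
        "DBClusterIdentifier": clone_cluster,
        "RestoreType": "copy-on-write",  # share pages until either side changes them
        "UseLatestRestorableTime": True,
    }


def clone_instance_request(clone_cluster: str, instance_id: str,
                           instance_class: str) -> Dict[str, Any]:
    """Keyword arguments for client.create_db_instance inside the new clone."""
    return {
        "DBInstanceIdentifier": instance_id,
        "DBInstanceClass": instance_class,
        "Engine": "neptune",
        "DBClusterIdentifier": clone_cluster,
    }


print(clone_request("prod-graph", "prod-graph-clone"))
print(clone_instance_request("prod-graph-clone", "prod-graph-clone-1", "db.r5.large"))
```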

Rapid Bulk Data Loading

- Property Graph Bulk Loading

Amazon Neptune’s bulk loader makes it easy to load large amounts of data from Amazon S3 through a REST interface. Data for the graph’s nodes and edges is loaded from files in CSV (comma-separated values) format. For details, see the Neptune documentation on property graph bulk loading.
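Neptune’s CSV format for property graphs uses system columns (`~id` and `~label` for vertices; `~id`, `~from`, `~to`, and `~label` for edges) plus typed property columns such as `name:String`. The sketch writes a tiny hypothetical graph in that shape with Python’s `csv` module; the resulting files would be uploaded to S3 before starting a load. Only the `String` and `Int` types are used here.

```python
import csv
import io
from typing import Dict, List

# Hypothetical sample data: two people and one "knows" edge between them.
VERTICES: List[Dict[str, object]] = [
    {"~id": "p1", "~label": "person", "name:String": "Alice", "age:Int": 34},
    {"~id": "p2", "~label": "person", "name:String": "Bob", "age:Int": 29},
]
EDGES: List[Dict[str, object]] = [
    {"~id": "e1", "~from": "p1", "~to": "p2", "~label": "knows"},
]


def to_csv(rows: List[Dict[str, object]]) -> str:
    """Serialize rows, taking the header from the first row's keys."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(rows[0]), lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


vertices_csv = to_csv(VERTICES)  # upload as, e.g., s3://my-bucket/graph/vertices.csv
edges_csv = to_csv(EDGES)        # and s3://my-bucket/graph/edges.csv (hypothetical)
print(vertices_csv)
print(edges_csv)
```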

- RDF Bulk Load

Amazon Neptune can also load RDF data stored in Amazon S3 quickly and efficiently through a REST interface. Supported serializations are N-Triples (NT), N-Quads (NQ), RDF/XML, and Turtle. For details, see the Neptune documentation on RDF bulk loading.
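Both kinds of bulk load are started by a POST to the loader endpoint (`/loader` on port 8182) with a JSON body naming the S3 source, the data format, an IAM role Neptune can assume to read the bucket, and the Region. The sketch builds that request without sending it; the host, bucket, role ARN, and the file-extension mapping are assumptions for illustration. The loader’s response includes a `loadId`, which can be polled with `GET /loader/<loadId>` to track progress.

```python
import json
import urllib.request

# Hypothetical mapping from file extension to the loader's "format" value.
LOADER_FORMATS = {
    "csv": "csv",       # property graph (Gremlin) CSV
    "nt": "ntriples",
    "nq": "nquads",
    "rdf": "rdfxml",
    "ttl": "turtle",
}


def bulk_load_request(host: str, s3_uri: str, file_ext: str, role_arn: str,
                      region: str) -> urllib.request.Request:
    """Build (but do not send) a POST to the Neptune loader endpoint."""
    payload = {
        "source": s3_uri,
        "format": LOADER_FORMATS[file_ext],
        "iamRoleArn": role_arn,  # role Neptune assumes to read the S3 objects
        "region": region,        # Region of the S3 bucket (same as the cluster)
        "failOnError": "TRUE",
    }
    return urllib.request.Request(
        url=f"https://{host}:8182/loader",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = bulk_load_request(
    "my-graph.cluster-abc123.us-east-1.neptune.amazonaws.com",  # hypothetical
    "s3://my-bucket/rdf/",
    "ttl",
    "arn:aws:iam::123456789012:role/NeptuneLoadFromS3",
    "us-east-1",
)
print(req.full_url, json.loads(req.data))
```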

General Use Cases

1. Social Networking: Neptune can be used to build social networking applications that efficiently process large sets of user profiles and interactions. For example, it can prioritize showing a user the latest updates from family, friends, and content they have liked, improving the user experience.

2. Recommendation Engines: With Neptune’s graph database capabilities, relationships between different pieces of information can be stored and queried quickly. This enables personalized, relevant recommendations, such as suggesting products to users based on their interests, their friends’ preferences, and their purchase history.

3. Fraud Detection: Neptune’s real-time processing capabilities make it a strong choice for fraud detection applications. By analyzing financial and purchase transactions, Neptune can surface patterns that indicate fraud, such as multiple people sharing the same IP address while residing at different physical addresses, or multiple individuals linked to a single email address.

4. Knowledge Graphs: Neptune lets you build knowledge graphs, which store information in a graph model and support graph-based queries. This helps users navigate highly interconnected datasets. For example, in an art-related knowledge graph, a user interested in one artwork can discover other works by the same artist or other pieces in the same museum.

5. Life Sciences: Neptune provides a secure and efficient way to store and query life sciences data. It supports encryption at rest for sensitive data and can be used to model and search relationships within biological and genetic data. For instance, Neptune can help identify potential gene-disease associations by analyzing protein pathways.

6. Network/IT Operations: Neptune can help you quickly analyze and understand anomalous network and IT events. By querying for graph patterns using event attributes, Neptune helps identify and trace the root cause of issues. For example, if a malicious file is detected on a host, Neptune can help track where it came from and how it spread within the network.
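The shared-IP pattern from the fraud detection example can be illustrated without a database. The sketch below uses plain Python over a small, hypothetical list of login records; in Neptune the same question would be expressed as a Gremlin or SPARQL traversal over account, IP, and address data, which is not shown here. Accounts that share an IP and also share an address (for example, one household) are deliberately not flagged.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Hypothetical (account, ip, physical_address) observations.
LOGINS: List[Tuple[str, str, str]] = [
    ("acct-1", "203.0.113.7", "12 Oak St"),
    ("acct-2", "203.0.113.7", "99 Elm Ave"),
    ("acct-3", "198.51.100.2", "5 Pine Rd"),
    ("acct-4", "198.51.100.2", "5 Pine Rd"),
]


def suspicious_ips(logins: List[Tuple[str, str, str]]) -> Dict[str, Set[str]]:
    """IPs shared by several accounts whose owners live at different addresses."""
    accounts: Dict[str, Set[str]] = defaultdict(set)
    addresses: Dict[str, Set[str]] = defaultdict(set)
    for account, ip, address in logins:
        accounts[ip].add(account)
        addresses[ip].add(address)
    return {ip: accts for ip, accts in accounts.items()
            if len(accts) > 1 and len(addresses[ip]) > 1}


print(suspicious_ips(LOGINS))
```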

Cost-Effectiveness

Pay Only for the Services You Utilize

Amazon Neptune pricing is designed to offer cost-effective solutions for your database needs. With Neptune, you eliminate the need for a substantial upfront expenditure, paying an hourly charge for each instance you start. This means you can create and stop instances as needed without incurring unnecessary costs. Additionally, you only pay for the storage you utilize, making it a cost-efficient option for managing your data.

Billing is transparent and flexible. There are no long-term commitments or upfront payments: On-Demand instances are billed per hour, for both read-write primary instances and Amazon Neptune replicas, which you can use to scale reads and improve failover. On the performance side, Amazon Neptune supports up to 15 read replicas and hundreds of thousands of queries per second, providing high throughput for demanding workloads. You do not need to provision storage or I/O capacity in advance; you pay only for the storage and I/Os you consume.

Storage is billed in GB-month increments and I/O in million-request increments, so you are charged only for the resources you use. Database cluster snapshots and automated database backups that you request incur a per GB-month charge for backup storage. Data transfer charges are based on the volume of data transferred into and out of Amazon Neptune.
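The billing components listed above add up as in the following back-of-the-envelope sketch. All unit prices are hypothetical placeholders rather than real Neptune rates, a month is approximated as 730 hours, I/O is prorated per million requests, and all transferred data is charged at one flat rate; use the AWS Pricing Calculator for real estimates.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # common approximation for a month of On-Demand hours


@dataclass
class Rates:
    """Hypothetical unit prices (USD); look up real ones for your Region."""
    instance_hour: float
    storage_gb_month: float
    io_per_million: float
    backup_gb_month: float
    transfer_gb: float


def monthly_estimate(r: Rates, instances: int, storage_gb: float, io_requests: int,
                     backup_gb: float, transfer_gb: float) -> float:
    """Sum the pay-as-you-go components for one month, rounded to cents."""
    compute = instances * HOURS_PER_MONTH * r.instance_hour
    storage = storage_gb * r.storage_gb_month
    io = (io_requests / 1_000_000) * r.io_per_million
    backup = backup_gb * r.backup_gb_month
    transfer = transfer_gb * r.transfer_gb
    return round(compute + storage + io + backup + transfer, 2)


rates = Rates(instance_hour=0.50, storage_gb_month=0.10, io_per_million=0.20,
              backup_gb_month=0.02, transfer_gb=0.09)
# One primary plus one replica, 100 GB stored, 50 million I/Os, 50 GB backups, 10 GB out.
print(monthly_estimate(rates, instances=2, storage_gb=100, io_requests=50_000_000,
                       backup_gb=50, transfer_gb=10))
```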

The Amazon Neptune Workbench, which lets you interact with your Neptune cluster using Jupyter notebooks hosted by Amazon SageMaker, is billed per instance-hour while it is in the Ready state. With Amazon Neptune, you can scale resources to match your needs, keeping the cost of running your database workloads efficient.

Curious About AWS Pricing?

Pricing Amazon Web Services may seem tricky, but the AWS Pricing Calculator makes it quick and easy to get an estimate!