Share

AWS Data Lake

What exactly is a data lake?

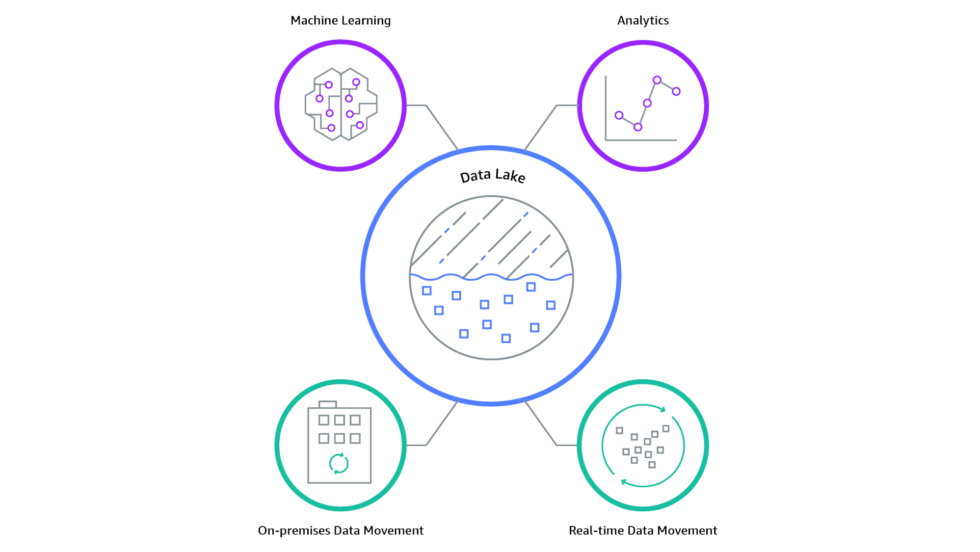

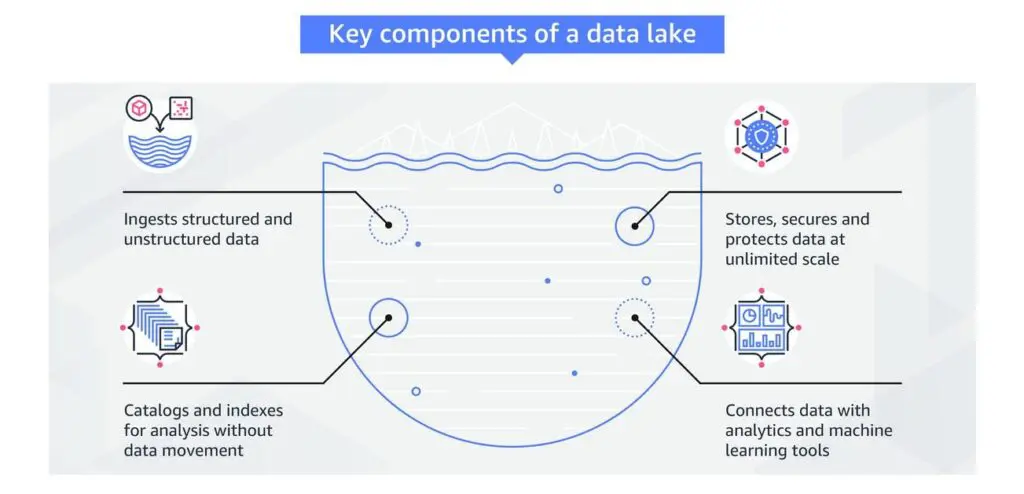

Structured or unstructured data can be stored in a data lake because it’s a centralized repository that can handle any volume. If you have a lot of data, you don’t need to organize it before performing various analytics, such as dashboards and visualisations, big data processing, real-time analytics, and machine learning.

Is a data lake really necessary, and what are the benefits?

Successful commercialization of data gives companies an edge over their competitors in the market sector. An Aberdeen analysis found that companies with a Data Lake outperformed their peers in organic sales growth by 9%. For these executives, it was able to perform new analytics like machine learning on new sources, such as click-stream logs, data from social media and internet-connected devices, and log files in the data lake. Increasing client acquisition and retention, productivity, and proactive device control and informed decision-making allowed them to more quickly identify and act on growth prospects for their organisation.

A system for storing and retrieving objects Amazon S3 is the best option for constructing a data lake because of its size and efficiency. Building and expanding a data lake of any size while maintaining 99.99999999% data security is possible using Amazon S3 (11 9s). AWS native services like big data analytics, AI/ML/HPC/ML, and media data processing can take advantage of Amazon S3 data lakes to obtain insight from unstructured data sets. Amazon Web Services (AWS) provides all of these services. You can use Amazon FSx for Lustre to launch file systems directly from your data lake while executing high-performance computing and machine learning applications. Alternatively, you can utilize Amazon Partner Network (APN) apps to execute analytics, artificial intelligence (AI), machine learning, and high-performance computing (HPC) (APN). For IT managers, storage administrators, and data scientists, S3’s extensive range of functionality can be a boon, since they can implement access controls and manage objects at scale while also analysing activities across their data lakes. Netflix and Airbnb employ S3’s tens of thousands of data lakes to keep up with their ever-increasing demands while also gaining minute-by-minute business insights. Some of the organisations that make use of Amazon S3 are FINRA and the Financial Industry Regulatory Authority.

How a Data Lake Differs from a Data Warehouse

Data lakes store vast amounts of raw, unstructured, or semi-structured data. They can hold data in its native format, which allows for greater flexibility when future analysis needs arise. These are ideal for data scientists and analysts who need to perform exploratory data analysis, real-time analytics, and machine learning. Generally, they are more cost-effective for storing large volumes of data because they leverage low-cost storage solutions. However, extracting meaningful insights can be more resource-intensive due to the unstructured nature of the data.

In contrast, data warehouses store structured data that is cleaned, transformed, and organized into schemas before being stored. This makes data warehouses superb for complex queries and report generation but requires more upfront processing. Because data remains in its raw form, data lakes support a variety of analytics tools and frameworks. Therefore, they are more suited for business intelligence and operational reporting, where data consistency, speed, and reliability are paramount. Though potentially more expensive to scale due to the need for additional computational resources to manage structured data, they offer optimized performance for analysis, ensuring quicker, more reliable results.

What is Lake Formation?

AWS Lake Formation simplifies creating a secure data lake by providing easy-to-use tools for setting up and managing data lakes. With AWS Lake Formation, users can quickly establish a secure data repository to centralize and curate data from various sources. It leverages machine learning algorithms to assist in cleaning, classifying, and organizing data within the data lake. This process helps in breaking down data silos and integrating different types of analytics, enabling users to gain valuable insights and make informed business decisions.

AWS Lake Formation can transform the process of creating a data lake from taking months to just days. This significant reduction in time is achieved through automation and streamlined workflows, allowing organizations to quickly harness the power of their data without lengthy setup times.

This service comes integrated with robust security features, including granular access controls at the column, row, and cell levels to safeguard sensitive data within the data lake. In Lake Formation, managing access controls is streamlined through a robust permissions system. The system uses grant and revoke permissions to allow administrators to define and control access at granular levels. Permissions can be adjusted on individual tables and columns rather than broader categories like buckets and objects.

This granular control mechanism ensures that only designated users have access to the necessary data, enhancing security. Administrators can easily review and modify these policies, ensuring that access rights remain up-to-date with changing requirements. Additionally, every access point and permission change is recorded, making it possible to audit data access comprehensively and conveniently from a single location. This audit trail is crucial for compliance and monitoring purposes.

Why use Amazon S3 for a data lake?

If you store 10,000,000 objects on Amazon S3, you should only expect to lose one object every 10,000 years if you use that degree of durability. The service automatically creates and stores copies of all submitted S3 items without the need for user intervention. Because of these safeguards, you can rest easy knowing that your data is always accessible and safe from errors and other risks.

- Designing for security

Make sure your data is protected with a solution designed for businesses with high data security needs.

- Instantaneous scalability

Increasing storage capacity does not necessitate long periods of resource collection.

- Resilient in the face of a total failure of the AWS Availability Zone

Your data should reside in at least three different Availability Zones (AZs). As a result, the zones are separated by a reasonable distance and there is no unnecessary lag time.

- Artificial intelligence, machine learning and media data processing are just a few of AWS’s many offerings.

You may execute applications on your data lake using AWS native services.

- Incorporation of services from third-party vendors

The APN allows you to connect your favourite analytics systems to your S3 data lake.

- Multiple data handling options are available.

When you use this solution, you can work at the object level with complete freedom, while simultaneously managing at scale and customising access, and also save money by doing audits on all of the data in an S3 data lake.

Download list of all AWS Services PDF

Download our free PDF list of all AWS services. In this list, you will get all of the AWS services in a PDF file that contains descriptions and links on how to get started.

Big data challenges can be addressed by using data lakes.

Data lakes are transforming data from a cost to an asset for organisations of all sizes and across all industries. Data lakes are essential for making sense of large amounts of data at the organisational level… Using machine learning in data lakes, data silos may be broken down and a variety of datasets can be analysed more easily while remaining safe.

Before making a decision on whether a data lake is the most suitable option for your organization, it’s essential to understand the capabilities and benefits offered by modern storage solutions like Amazon S3. Amazon S3’s limitless scalability makes it possible to move, store, manage, and secure any form of data, structured or otherwise, regardless of its structure. Amazon S3 is an illustration of an Amazon service. Your data lake can benefit from AWS services. There are a large selection of AWS analytics apps, AI and machine learning services, and high-performance file systems available to S3 data lake customers. There is no need to do additional data processing or move a data to other storage places as a result.

Your data lake can benefit from AWS services

There are a large selection of AWS analytics apps, AI and machine learning services, and high-performance file systems available to S3 data lake customers. There is no need to do additional data processing or move data to other storage places as a result. Third-party analytics and machine learning tools can be used to analyse and learn from your S3 data, as well.

When you use AWS Lake Formation, you don’t have to wait months to construct a data lake.

Simply define where data should be stored and the access rules that apply, and then use AWS Lake Formation to quickly establish an encrypted lake instead of waiting months for the process to complete. Additionally, AWS Lake Formation provides various tools including APIs, a command line interface (CLI), and Java and C++ SDKs for seamless integration of your custom applications and data engines.

Data is cleaned, cataloged, and classified using machine learning, and access restriction settings are selectable by users. Using a central database, users can obtain a list of data sets and the terms and conditions under which they can access and use them. The machine learning technology employed by Lake Formation plays a crucial role in streamlining the data cleaning process, ensuring accuracy and efficiency. Through sophisticated algorithms and automated techniques, Lake Formation leverages machine learning to enhance data quality and facilitate seamless data management.

Analyze data on Amazon Web Services (AWS) without transferring it.

For a range of use cases, the following purpose-built analytics services can be used to analyse data stored in an S3 data lake. An S3 data lake can be used to perform these operations in a time- and resource-efficient manner, without the requirement for ETL processes. Your preferred analytics systems can be accommodated in your S3 data lake.

To perform AI and Machine Learning processes on your data, put it in S3.

Build recommendation engines and analyse photographs and videos saved in S3 with AWS AI services like Amazon Comprehend, Amazon Forecast, and Amazon Personalize. Discover insights from your unstructured information using Amazon Rekognition. AWS AI services like Comprehend and Forecast, as well as Personalize and Rekognition, are simple to get started with. You may use Amazon Sagemaker to easily construct, train, and deploy models utilizing S3 datasets for machine learning models.

Lake Formation aids in the discovery of data that can be moved into a data lake by automatically scanning various AWS data sources that are accessible via AWS IAM policies. It specifically scans Amazon S3, Amazon RDS, and AWS Cloudtrail sources to identify data potentially ingestible into your data lake using predefined blueprints. This automated process ensures that only data from authorized sources is identified for ingestion. Additionally, working in conjunction with Lake Formation, AWS Glue can extract and ingest data from additional sources such as S3 and Amazon DynamoDB, expanding the range of data sources that can be integrated into your data lake.

Integrating third-party business applications such as Tableau and Looker with AWS data sources is streamlined through Amazon Athena or Amazon Redshift. This integration is managed by the underlying data catalog in Lake Formation, ensuring that regardless of the third-party tool used, data access is consistently governed and controlled.

In seconds, you can query data already stored in S3.

Data filtering and accessing within objects, which might take a long time, can be offloaded to the cloud using S3 Select by application developers. With S3 Select, you may query object metadata without moving the item to another data store. By reducing the amount of data that needs to be loaded and processed by your apps, S3 Select can improve the performance of most applications that often request data from S3. Additionally, the cost of querying can be reduced by as much as 80%.

With Spark, Hive, and Presto as well as S3 Select, all of these AWS services may be used together in the cloud.

Run high-performance programmes by connecting data to file systems.

FSx for Lustre by Amazon is optimized for the fast processing of workloads such as machine learning, high performance computing (HPC), video processing, financial modelling, and electronic design automation in addition to working natively with your S3 data lake (EDA). With an S3 file system that provides access latency of milliseconds and rates of hundreds of gigabytes per second (GBps), it can be set up in just a few minutes and used instantly (IOPS). The FSx for Lustre file system presents S3 objects as files and allows you to write results back to S3 when the file system is linked to an S3 bucket.

Manage your data lake more cost-effectively by utilising S3 capabilities.

In order to construct (or re-platform) and manage a data lake of any size and application, Amazon S3 is a robust solution. A few clicks are all it takes to make changes to tens of billions of objects, set up granular data access controls, save money by putting items in different storage classes, and audit all activity on your S3 assets. It is the only cloud storage solution that allows you to do all of these things.

- All tiers of the data lake infrastructure can be used to manage data.

With Amazon S3, you can manage objects, accounts, and buckets all at once. It is possible to utilize metadata tags to organize data in a way that is beneficial to your business. It’s also possible to organize items using prefixes and bucket systems. At the press of a button, one or more items can be replicated around the world, restricted access can be imposed, and the storage class can be altered.

- A few mouse clicks can affect billions of items.

A single API call or a few clicks in the S3 Management Console can perform operations on billions of objects with S3 Batch Actions, and you can track the progress of your queries. There are no long delays in changing or transferring object characteristics and metadata between buckets. Amazon Lambda operations, S3 Glacier archive restorations, and access controls are also available in S3.

- Keep sensitive information out of reach of the general public.

Access to select buckets and objects can be restricted using bucket rules, object tags, and ACLs. Controlling who has access to what parts of your AWS account is easy using AWS Identity and Access Management. All access requests from the outside can be blocked by configuring S3 Block Public Access for a bucket of objects or even an entire AWS account.

Lake Formation is designed to seamlessly work with AWS Identity and Access Management (IAM) to efficiently manage and enforce data protection policies specific to authenticated users and roles within an organization. By integrating with IAM, Lake Formation can automatically associate individuals and roles with the appropriate data protection policies stored in the data catalog. This integration streamlines the process of mapping permissions and access controls, ensuring that users can access data based on their identity and role.

- You can save money by storing data across many S3 Storage Classes.

The cost of storing data in S3’s six distinct storage classes, as well as the ease with which it can be accessed, are very variable. You can learn more about how your data is being used with S3 Storage Class Analysis. To save money, you can use S3 Glacier or S3 Glacier Deep Archive for less often accessed objects by establishing lifespan policies for them.

- All S3 resource requests and other activity should be scrutinized.

It is highly suggested that all S3 resource requests be reviewed, as well as any other actions. Reporting tools for S3 allow you to monitor the use of your S3 resources (such as object metadata such as retention date, business unit, and encryption status), keep track of costs and usage patterns for each user, and more. Using these insights, you may make adjustments to your data lake and the apps that make use of it, which could result in cost savings.

Need help on AWS?

AWS Partners, such as AllCode, are trusted and recommended by Amazon Web Services to help you deliver with confidence. AllCode employs the same mission-critical best practices and services that power Amazon’s monstrous ecommerce platform.