Break Through with Big Data on AWS

Big Data (Quick Explanation):

Big data describes large volumes of data, captured from many sources, that can be used to make informed business decisions.

Companies of all sizes generate valuable data to use for increasing customer satisfaction, raising profitability, and reducing costs. Data insights refine your vision and are an essential tool that leads to confident, proactive, evidence-based decisions.

When your data exceeds the capacity of traditional databases, performance suffers, which limits your business’s effectiveness. Hence the urgency of maintaining the quality and integrity of this digital goldmine.

Overview

• Big data and the three Vs

• Types of data analytics

• Why it matters

• Challenges

• Collecting data online

• AWS services

• Big data partner

What is big data?

Big data describes a mass of complex structured and unstructured information that businesses gather and use to address diverse issues. Volume, velocity, and variety are its three defining characteristics, and each one shapes how the data is captured and processed.

Adaptability matters in big data because the field advances and evolves rapidly. A sustainable data team fosters a culture of open-mindedness and continuous learning. To excel and innovate, professionals must look beyond what they already know and embrace an ever-changing landscape of possibilities. By adopting new technologies, methodologies, and trends, data professionals stay at the forefront of their industry and use big data to drive meaningful insights and transformative outcomes. Adaptability is therefore essential for harnessing the full potential of big data and staying ahead in a dynamic, competitive environment.

Three Vs of big data

Volume

Social media companies like Facebook produce enormous amounts of data from photos, videos, posts, and more. Volumes like these exceed the capacity of traditional databases and require efficient, scalable storage.

Velocity

Velocity refers to the speed at which data is generated and must be processed. Big data systems process incoming data streams quickly enough to keep up with the flow and avoid backlogs.
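One common way stream processors keep up with high-velocity data is to aggregate events into fixed time windows instead of storing and re-scanning everything. The minimal sketch below shows a tumbling-window count in plain Python. The event format `(timestamp_in_seconds, event_type)` and the 60-second window are illustrative assumptions, and a production system would run this logic inside a streaming service rather than over an in-memory list.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

def tumbling_window_counts(
    events: Iterable[Tuple[float, str]], window_seconds: int = 60
) -> Dict[Tuple[int, str], int]:
    """Count events per (window_start, event_type) over fixed, non-overlapping windows.

    Each event is an assumed (timestamp_in_seconds, event_type) pair.
    """
    counts: Counter = Counter()
    for timestamp, event_type in events:
        # Align the timestamp down to the start of the window it falls into.
        window_start = int(timestamp // window_seconds) * window_seconds
        counts[(window_start, event_type)] += 1
    return dict(counts)

# Hypothetical clickstream events, for illustration only.
stream = [(0.5, "click"), (12.0, "view"), (59.9, "click"), (61.0, "click"), (125.3, "view")]
window_counts = tumbling_window_counts(stream)
print(window_counts)
```

Because each event only updates a small counter, the work per event stays constant no matter how much history has accumulated, which is what lets this pattern keep pace with fast-arriving data.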

Variety

Variety refers to the range of data types a system must handle: structured data, unstructured data such as images or videos, and human-generated data such as texts, emails, or voice messages. Sorting the data into detailed categories enables comprehensive storage and streamlined management.

Types of data analytics

Descriptive analytics help users identify what happened and why. Examples include traditional query and reporting environments with scorecards and dashboards.

Predictive analytics estimate the likelihood of a future event. Applications include early warning systems, fraud detection, predictive maintenance, and forecasting.

Prescriptive analytics offer specific recommendations based on data. They help answer questions such as: what approach should I take if X, Y, or Z happens?
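To make the three types concrete, the sketch below applies each one to the same small series. The monthly sales figures, the straight-line forecast, and the capacity threshold are all hypothetical choices for illustration; real predictive models are usually far richer than a least-squares line, and real prescriptive systems weigh many more factors than a single threshold.

```python
from statistics import mean

# Hypothetical monthly sales figures (months 1 to 5), for illustration only.
monthly_sales = [120.0, 135.0, 150.0, 160.0, 178.0]

# Descriptive: summarize what already happened.
summary = {
    "total": sum(monthly_sales),
    "average": mean(monthly_sales),
    "best_month": monthly_sales.index(max(monthly_sales)) + 1,
}

def linear_forecast(values):
    """Predictive: fit a least-squares line to the series and extrapolate one step ahead."""
    xs = list(range(len(values)))
    x_bar, y_bar = mean(xs), mean(values)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, values)) / sum(
        (x - x_bar) ** 2 for x in xs
    )
    intercept = y_bar - slope * x_bar
    return intercept + slope * len(values)

def recommend(forecast_value, capacity):
    """Prescriptive: map the forecast to an action using a simple, assumed threshold rule."""
    if forecast_value > capacity:
        return "increase inventory"
    return "keep current inventory"

forecast = linear_forecast(monthly_sales)
print(summary, round(forecast, 1), recommend(forecast, capacity=170.0))
```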

Why does big data matter?

Capturing data about how people interact with your product or service yields valuable insight for assessing your business’s plans and progress. If customers engage with X but not with Y, there is usually an identifiable reason, and analytics can help uncover it so you can adjust course.

Big data gathers this key information so you can turn raw data into sound decisions, leading to improved quality, customer loyalty, steady referrals, increased ROI, and more. Without this information, you’re essentially taking a shot in the dark at how to make your business prosper.

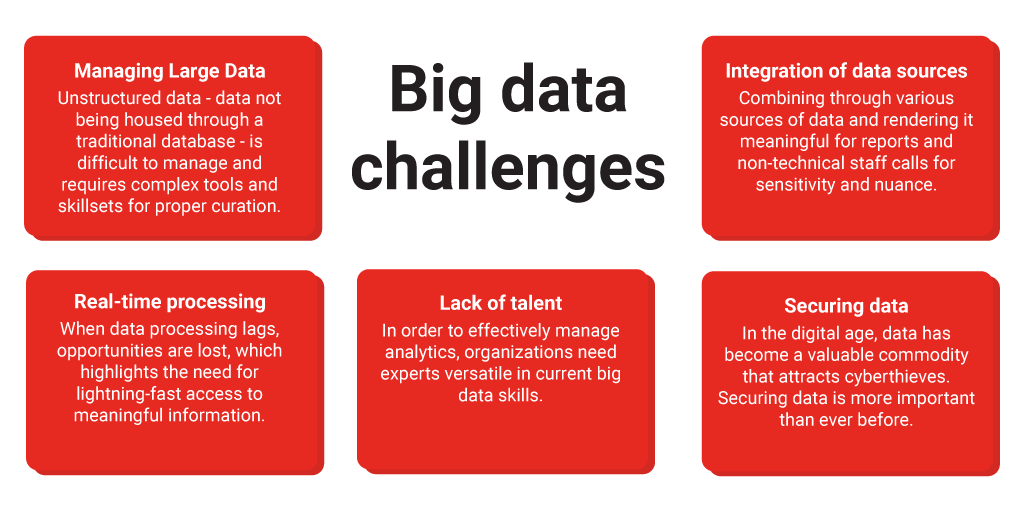

Big data challenges

Big data challenges include storing and analyzing voluminous, rapidly growing, diverse data and deciding how to manage it properly. Daily data production has reached an astounding level: by one widely cited estimate, approximately 2.5 quintillion bytes of data are generated each day. Failing to address these challenges leads to escalating costs and diminished productivity.

Managing large volumes of data

As our way of life moves online, data’s role grows increasingly vital. Unstructured data, meaning data that does not fit the predefined schema of a traditional database, is difficult to manage and requires complex tools and skill sets to curate properly.

Real-time processing

When data processing lags, opportunities are lost, which highlights the need for fast access to meaningful information. Real-time processing can deliver results within fractions of a second, helping you keep your decisions grounded in current data.

A Data Scientist brings skills well beyond spotting trends and patterns in data. They build predictive models and develop machine learning algorithms that learn from data to produce accurate forecasts. To excel in the role, a Data Scientist needs a strong foundation in statistics and critical thinking, proficiency in programming languages such as Python, R, SAS, SQL, and Scala, experience handling and analyzing both structured and unstructured data, and the ability to visualize the resulting insights effectively.

Lack of talent

To organize and manage analytics effectively, organizations need experts skilled in current big data tools and techniques. These professionals are in high demand, earning salaries ranging from $135,000 to $196,000, depending on location and experience.

Integration of disparate data sources

Data emerges from a variety of sources, including social media, email, documents, etc. Combing through all of this data and rendering it comprehensible for reports and non-technical staff calls for sensitivity and nuance.

Securing data

In the digital age, data has become a valuable commodity that attracts cyberthieves: knowledge is power! Big data repositories and analytics pipelines therefore need additional layers of security to protect this sensitive and invaluable data.

Data Cleaning

A data hygienist plays a vital role on a big data team by ensuring that the collected data is accurate, complete, and relevant. Businesses, their partners, and their customers generate massive amounts of data, so someone must sort, sift, and scrub it to keep analytical efforts from being wasted on unreliable or useless information. Through meticulous attention to detail, the data hygienist enables accurate decision-making and helps the team uncover valuable insights from the vast amount of data available.
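The "sort, sift, and scrub" work can be sketched as a few simple rules applied to each record. The sketch below assumes a hypothetical schema with `customer_id`, `email`, and `amount` fields; it drops incomplete records, normalizes email formatting, rejects amounts that are not numbers, and removes duplicates that only differed in formatting. Real cleaning rules depend on your data and business context.

```python
from typing import Dict, List

REQUIRED_FIELDS = ("customer_id", "email", "amount")  # hypothetical schema

def clean_records(records: List[Dict[str, str]]) -> List[Dict[str, object]]:
    """Return complete, normalized, de-duplicated records."""
    seen = set()
    cleaned: List[Dict[str, object]] = []
    for record in records:
        # Drop records that are missing any required field.
        if any(not record.get(field) for field in REQUIRED_FIELDS):
            continue
        # Normalize formatting so near-duplicates compare equal.
        email = record["email"].strip().lower()
        try:
            amount = float(record["amount"])
        except ValueError:
            continue  # drop values that are not valid numbers
        key = (record["customer_id"], email, amount)
        if key in seen:
            continue  # skip duplicates
        seen.add(key)
        cleaned.append({"customer_id": record["customer_id"], "email": email, "amount": amount})
    return cleaned

# Hypothetical raw input, for illustration only.
raw_records = [
    {"customer_id": "c1", "email": "Ana@Example.com ", "amount": "19.99"},
    {"customer_id": "c1", "email": "ana@example.com", "amount": "19.99"},  # duplicate after normalizing
    {"customer_id": "c2", "email": "", "amount": "5.00"},                  # missing email
    {"customer_id": "c3", "email": "bo@example.com", "amount": "n/a"},     # invalid amount
    {"customer_id": "c4", "email": "cy@example.com", "amount": "42"},
]
cleaned = clean_records(raw_records)
print(cleaned)
```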

Ways to collect data online

Online Tracking

Track customer interaction through your website or application. Customers can generate up to 40 data points, such as time spent on your site, where they clicked, and much more. Use this information to hone your presence and messaging.
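As a small illustration, two of the data points mentioned above, time spent on the site and where visitors go, can be derived from raw page-view events. The event layout `(session_id, timestamp_in_seconds, page)` and the sample values are hypothetical; analytics tools define sessions and engagement time in their own ways.

```python
from collections import Counter

# Hypothetical page-view events: (session_id, timestamp_in_seconds, page).
events = [
    ("s1", 0, "/home"),
    ("s1", 30, "/pricing"),
    ("s1", 95, "/signup"),
    ("s2", 10, "/home"),
    ("s2", 25, "/blog"),
]

def session_durations(events):
    """Approximate time on site as the gap between a session's first and last event."""
    first_seen, last_seen = {}, {}
    for session_id, timestamp, _page in events:
        first_seen[session_id] = min(timestamp, first_seen.get(session_id, timestamp))
        last_seen[session_id] = max(timestamp, last_seen.get(session_id, timestamp))
    return {sid: last_seen[sid] - first_seen[sid] for sid in first_seen}

def page_views(events):
    """Count views per page to show where visitors spend their attention."""
    return Counter(page for _session, _ts, page in events)

print(session_durations(events))
print(page_views(events).most_common(1))
```

Note that this approximation cannot see how long a visitor stayed on the last page of a session, because no later event marks when they left.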

Transactional Data Tracking

If you sell goods or services online, you can store transactional records. This information reveals consumption patterns, helping you target ads and offer deals to the right audience at the right time and place.

Marketing Analytics

Track how people interact with your ad campaigns or social media content. If your audience engages with a specific piece of content, you’ll know where and how to target more of that material for maximum response.

Subscription or Registration

During registration, ask customers for basic information, such as email address, first and last name, and phone number. This information supports targeted marketing campaigns and a better user experience through personalized messaging.

AWS big data analytics services

When existing databases and applications struggle to scale and absorb a sudden influx of data, AWS big data services fill the gap. These services are designed to process data efficiently and turn it into insights that support faster, more reliable decisions.

Analytics

Interactive Analytics

Easily analyze data in Amazon S3 using standard SQL with Amazon Athena.

Data warehousing

Amazon Redshift lets you run SQL and analytic queries against structured and semi-structured data, including data that stays in Amazon S3, without moving it.

Big data processing

Amazon EMR lets you efficiently process vast quantities of data with frameworks such as Apache Spark and Hadoop, supporting data engineering, data science development, and collaboration.
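Frameworks such as Hadoop process large datasets by splitting the input, mapping each split independently, grouping intermediate results by key (the shuffle), and reducing each group. The sketch below shows that MapReduce pattern as a word count in plain Python. It runs on one machine and only illustrates the model; on EMR the framework distributes the same phases across a cluster.

```python
from collections import defaultdict
from itertools import chain

def map_phase(split):
    """Emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in split.split()]

def shuffle_phase(pairs):
    """Group all values by key, as the framework does between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped

def reduce_phase(grouped):
    """Combine each key's values into a single result."""
    return {key: sum(values) for key, values in grouped.items()}

# Hypothetical input splits; a cluster would process each split on a different worker.
splits = ["big data on AWS", "data lakes and data warehouses"]
mapped = chain.from_iterable(map_phase(s) for s in splits)
counts = reduce_phase(shuffle_phase(mapped))
print(counts)
```

Because each map call depends only on its own split and each reduce call only on its own key, both phases can run in parallel, which is what lets this model scale to very large datasets.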

Real-time analytics

Use Amazon Kinesis to collect, process, and analyze streaming data as it arrives and flows into your data lake, giving you on-the-spot responsiveness.
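Kinesis Data Streams spreads incoming records across shards by partition key: it hashes each partition key with MD5 into a 128-bit integer and routes the record to the shard whose hash key range contains that value. The sketch below approximates this routing in plain Python under the assumption that the shards split the 128-bit hash key space evenly. It is not the Kinesis API, and streams that have been resharded can have uneven ranges.

```python
import hashlib

HASH_KEY_SPACE = 2 ** 128  # MD5 produces a 128-bit value

def shard_for_partition_key(partition_key: str, shard_count: int) -> int:
    """Return the index of the shard whose hash key range contains the key's MD5 hash.

    Assumes shard_count shards that divide the hash key space evenly.
    """
    hash_value = int(hashlib.md5(partition_key.encode("utf-8")).hexdigest(), 16)
    range_size = HASH_KEY_SPACE // shard_count
    # Clamp so any remainder at the top of the space falls into the last shard.
    return min(hash_value // range_size, shard_count - 1)

# Hypothetical partition keys: one per user.
for key in ["user-1", "user-2", "user-3"]:
    print(key, "->", shard_for_partition_key(key, shard_count=4))
```

Records that share a partition key always land on the same shard, which preserves their relative order; using many distinct keys (for example, one per user or device) spreads the load across shards.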

Operational analytics

Amazon OpenSearch Service (formerly Amazon Elasticsearch Service) is a strong choice for searching, filtering, aggregating, and visualizing data in near real time for application monitoring, log analytics, and clickstream analytics.

Dashboards and visualizations

Effortlessly deliver insights company-wide using Amazon QuickSight.

Data movement

Real-time data movement

Use Amazon Kinesis to manage data streams and get analytics on data as it arrives.

Data lakes

Object storage

Store and retrieve any amount of data from anywhere with Amazon S3.

Backup and archive

Use the Amazon S3 Glacier storage classes to archive data and keep long-term backups securely, reliably, and cost-effectively.
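A common way to move aging data into the Glacier storage classes automatically is an S3 lifecycle rule. The sketch below builds such a configuration as a Python dictionary in the shape that S3 lifecycle configurations use (for example, the `LifecycleConfiguration` argument of boto3's `put_bucket_lifecycle_configuration`). The rule ID, the `logs/` prefix, and the 90- and 365-day thresholds are hypothetical values chosen for illustration.

```python
import json

lifecycle_configuration = {
    "Rules": [
        {
            "ID": "archive-old-logs",       # hypothetical rule name
            "Filter": {"Prefix": "logs/"},  # hypothetical key prefix
            "Status": "Enabled",
            "Transitions": [
                # Move objects to S3 Glacier Flexible Retrieval after 90 days...
                {"Days": 90, "StorageClass": "GLACIER"},
                # ...and to S3 Glacier Deep Archive after a year.
                {"Days": 365, "StorageClass": "DEEP_ARCHIVE"},
            ],
        }
    ]
}

print(json.dumps(lifecycle_configuration, indent=2))
```

Tiering by age like this keeps recent data in faster storage while older data moves to cheaper archive classes without manual work.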

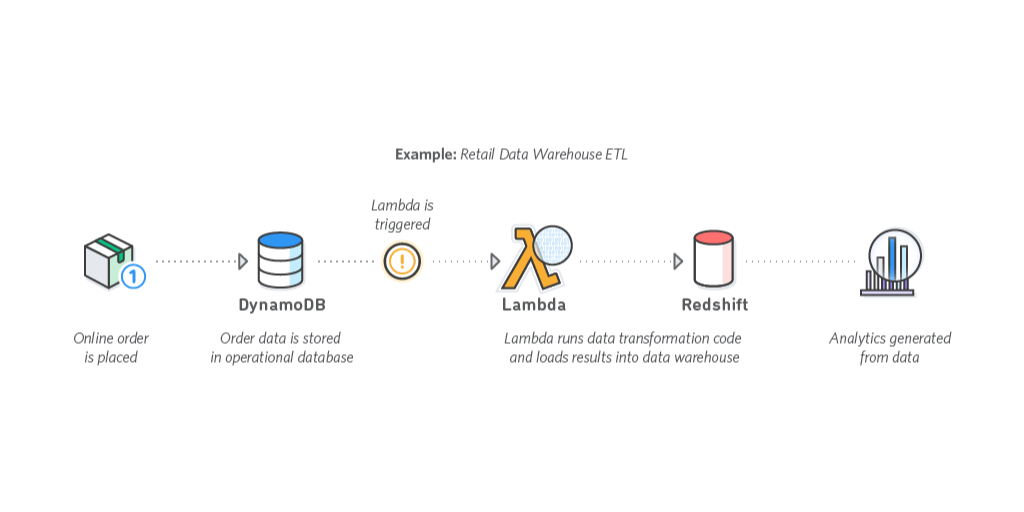

Data catalog

AWS Glue is a serverless extract, transform, and load (ETL) service that makes it simple to discover, prepare, and catalog data for analytics.
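The three ETL steps, plus the kind of schema information a data catalog records, can be sketched in a few lines of plain Python. This is not the AWS Glue API: Glue jobs typically run on Apache Spark, and Glue crawlers infer schemas into the Data Catalog. The CSV sample, the cleanup rules, and the in-memory "warehouse" list are hypothetical stand-ins.

```python
import csv
import io

# Hypothetical raw export, for illustration only.
RAW_CSV = """order_id,country,amount
1,us,19.99
2,de,5.00
3,us,42.50
"""

def extract(text):
    """Extract: read raw CSV rows as dictionaries of strings."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: cast column types and normalize country codes to upper case."""
    return [
        {"order_id": int(r["order_id"]), "country": r["country"].upper(), "amount": float(r["amount"])}
        for r in rows
    ]

def load(rows, target):
    """Load: append rows to the target store and report how many were written."""
    target.extend(rows)
    return len(rows)

def infer_schema(rows):
    """Record each column's type name, loosely like a catalog table definition."""
    return {column: type(value).__name__ for column, value in rows[0].items()}

warehouse = []
loaded = load(transform(extract(RAW_CSV)), warehouse)
print(loaded, infer_schema(warehouse))
```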

Third-party data

Using AWS Data Exchange, find and subscribe to third-party data.

Predictive analytics and machine learning

Frameworks and interfaces

Accelerate deep learning at any scale in the cloud with AWS Deep Learning.

Platform services

Amazon SageMaker: Develop, train, and deploy machine learning models.

Monitoring data

All-in-one monitoring

Using Datadog, monitor all of your data services on one platform at any scale.

Big data partner on AWS

AllCode partners with Amazon Web Services to save you time in planning, forecasting, and making better business decisions.

Businesses that want to harness the power of big data can rely on Amazon Web Services (AWS) for a diverse range of tools and services. AWS offers a comprehensive suite of solutions to address the challenges of storing, analyzing, and managing voluminous, rapidly growing, and diverse data.

One of the key offerings is Amazon Athena, an interactive analytics service that allows users to analyze data stored in Amazon S3 using standard SQL queries. With its serverless architecture, Athena eliminates the need for infrastructure setup and maintenance, enabling users to focus solely on data analysis. For data warehousing needs, AWS provides Amazon Redshift, a fully managed, petabyte-scale data warehouse. Redshift allows businesses to efficiently store and query large volumes of structured and semi-structured data, providing fast and scalable performance for complex analytical queries.

When it comes to big data processing, Amazon EMR stands out as a powerful solution. EMR is a managed cluster platform that simplifies running big data frameworks such as Apache Spark and Hadoop. It offers a flexible and scalable environment for processing vast amounts of data, enabling businesses to derive valuable insights quickly. For real-time analytics, AWS offers Amazon Kinesis, a fully managed service that makes it easy to collect, process, and analyze streaming data in real time. With Kinesis, businesses can build real-time dashboards, gain insights from IoT devices, and monitor application logs, among other use cases.

For storage, AWS provides Amazon S3, a highly scalable and secure object storage service. S3 allows businesses to store and retrieve any amount of data from anywhere on the web, making it ideal for backup, archiving, and data lake implementations. For deep archival, AWS offers Amazon S3 Glacier, which provides low-cost storage options for long-term data retention. AWS also understands the importance of data cataloging, and that’s where AWS Glue comes in. Glue is a fully managed extract, transform, and load (ETL) service that makes it easy to prepare and catalog data for analytics. It automates discovering, cataloging, and transforming data, allowing businesses to focus on deriving insights rather than on data preparation.

AllCode, a trusted big data partner on AWS, offers a diverse portfolio of services designed to help businesses plan, forecast, and make data-driven decisions. With our expertise and AWS’s robust suite of big data solutions, businesses can unlock the full potential of their data and drive innovation. Find out what our big data services can do for you!
